In the first part of this blog series, NSX-T: Routing where you need it (Part 1), I discussed how East-West (E-W) routing is completely distributed on NSX-T and how routing is done by the Distributed Router (DR) running as a kernel module in each hypervisor.

In this post, I will explain how North-South (N-S) routing is done in NSX-T, and we will also look at ECMP topologies. N-S routing is provided by the centralized component of the logical router, known as the Service Router. Before we get into N-S routing and the packet walk, let’s define the Service Router.

Service Router (SR)

Whenever a service that cannot be distributed is enabled on a logical router, a Service Router (SR) is instantiated. Some services in NSX-T today are not distributed, such as:

1) Connectivity to physical infrastructure

2) NAT

3) DHCP server

4) MetaData Proxy

5) Edge Firewall

6) Load Balancer

Let’s take a look at one of these services (connectivity to physical infrastructure) and see why a centralized routing component makes sense for running it. Connectivity to the physical topology is intended to exchange routing information between the NSX domain and external networks (DC, campus or WAN). In a datacenter leaf-and-spine topology, or any other datacenter topology, there are designated devices that peer with WAN routers to exchange routes using BGP and provide N-S connectivity. To avoid the growth in BGP peerings that would come from peering every hypervisor with the physical routers, and to reduce control plane complexity, a dedicated routing component (the Service Router) is designed to serve this need.

NSX-T achieves the best of both worlds with the DR/SR approach: distributed (in-kernel) routing for E-W traffic, and centralized routing for N-S traffic and other centralized services.
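To make the scaling argument concrete, here is a back-of-the-envelope count in Python. The host, Edge node, and router counts are hypothetical, and the sketch assumes every BGP speaker peers with every physical router; real designs vary.

```python
def bgp_sessions(speakers: int, physical_routers: int) -> int:
    """Sessions needed if every BGP speaker peers with every physical router."""
    return speakers * physical_routers


# Hypothetical sizing, for illustration only.
HYPERVISORS = 200
EDGE_NODES = 2
PHYSICAL_ROUTERS = 2

per_hypervisor = bgp_sessions(HYPERVISORS, PHYSICAL_ROUTERS)  # every host peers
via_edges = bgp_sessions(EDGE_NODES, PHYSICAL_ROUTERS)        # only SRs on Edge nodes peer

print(f"Peering from every hypervisor: {per_hypervisor} sessions")  # 400
print(f"Peering from Edge nodes only:  {via_edges} sessions")       # 4
```

In the Edge node model, adding hypervisors adds no BGP sessions on the physical routers, because new hosts reach the SR over the overlay instead of peering themselves.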

Introducing the Edge node:

We need a centralized pool of capacity to run these services in a highly available and scalable fashion. The appliances that host these centralized services, or “SR instances”, are called Edge nodes. They are available in two form factors: bare metal or VM (both leveraging the Linux Foundation’s DPDK technology).

So, when a logical router is connected to the physical infrastructure, an SR is instantiated on the Edge node. Similarly, when a centralized service like NAT is configured on a logical router, an SR (service instance) for that logical router is instantiated on the Edge node. Edge nodes (all VM or all bare metal) can be logically grouped into an Edge cluster to provide scale-out, redundant, high-throughput gateway functionality for logical networks.

The following diagram shows a typical leaf-and-spine topology with Edge nodes providing connectivity to the physical infrastructure. As shown in the diagram, the Distributed Router (DR) component of a logical router is instantiated on all transport nodes (compute hypervisors and Edge nodes). To provide connectivity to the physical infrastructure, the SR has been instantiated on the Edge nodes. These Edge nodes are also configured as transport nodes and are assigned a TEP (Tunnel End Point) IP, just like compute hypervisors, to send and receive overlay traffic to and from the compute hypervisors. As the diagram shows, traffic from a VM hosted on a compute hypervisor reaches a device in the physical infrastructure by traversing the Edge node over the overlay network.

![]()

Figure 1: Physical Topology showing Compute hypervisors and Edge Node

Before we get into a detailed packet walk for this N-S traffic, it is important to understand how the two routing components (DR and SR) are connected.

As soon as a logical router is configured via NSX-T Manager, a DR is instantiated on all transport nodes. When we enable a centralized service on that logical router, an SR is instantiated. An internal link called the Intra-Tier Transit link is automatically created between the DR and SR using a transit logical switch. This link defaults to IP addresses in the 169.254.0.0/28 subnet. You do not have to configure this transit logical switch, configure IP addressing on the link (unless you want to change the subnet range), or configure any routing between the DR and SR. All of this is auto-plumbed, meaning NSX-T takes care of it in the background.
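A quick check with Python’s standard `ipaddress` module shows what the default /28 transit subnet provides. The DR and SR addresses are the ones observed in this post’s outputs; the sketch does not model how NSX-T actually allocates them.

```python
import ipaddress

# Default Intra-Tier transit subnet described above.
transit = ipaddress.ip_network("169.254.0.0/28")

usable = list(transit.hosts())  # excludes the network and broadcast addresses
print(transit.num_addresses, len(usable))  # 16 14
print(usable[0], usable[-1])               # 169.254.0.1 169.254.0.14
print(transit.is_link_local)               # True: 169.254.0.0/16 is link-local space

# DR and SR ends of the link as seen in this post's outputs.
dr_ip = ipaddress.ip_address("169.254.0.1")
sr_ip = ipaddress.ip_address("169.254.0.2")
print(dr_ip in transit, sr_ip in transit)  # True True
```

The 14 usable addresses leave room for the DR plus more than one SR, such as the second SR at 169.254.0.3 in the ECMP section.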

Moving on, let’s look at the interfaces and how this routing is handled in the background. The following is the logical view of a logical router showing both DR and SR components when connected to a physical router:

![]()

Figure 2: Logical Topology showing Logical router components (DR & SR)

As shown in the diagram above, the logical router has the following interfaces:

- Uplink: Interface connecting to the physical infrastructure/router. Static routing and EBGP are supported on this interface. Both static routing and EBGP can leverage BFD.

- Downlink: Interface connecting to a logical switch.

- Intra-Tier Transit Link: Internal link between the DR and SR, using a transit logical switch.

The following output from an Edge node shows both components of a logical router, DR and SR. Observe the Intra-Tier Transit link on DR with an IP of 169.254.0.1 and on SR with an IP of 169.254.0.2.

![]()

Figure 3: DR/SR interfaces

Routing between SR and DR

Let’s look at how routing works between the DR and SR. No routing protocol runs on the Intra-Tier transit link. As soon as the SR is instantiated, the management plane configures a default route on the DR with the next hop set to the SR’s Intra-Tier transit link IP, which is 169.254.0.2 in this case.

This allows the DR to take care of E-W routing while the SR provides N-S connectivity to all the subnets connected to the DR.
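The effect of that default route can be sketched as a longest-prefix-match lookup. This is a minimal Python model, not NSX-T code: the `Route` type and `lookup` function are hypothetical, and the table holds only the connected Web subnet and the management-plane default route described above.

```python
import ipaddress
from typing import List, NamedTuple, Optional


class Route(NamedTuple):
    prefix: ipaddress.IPv4Network
    next_hop: str  # next-hop IP, or egress LIF for a connected subnet
    origin: str


def lookup(table: List[Route], dst: str) -> Optional[Route]:
    """Longest-prefix match: the most specific route containing dst wins."""
    addr = ipaddress.ip_address(dst)
    matches = [r for r in table if addr in r.prefix]
    return max(matches, key=lambda r: r.prefix.prefixlen, default=None)


# Hypothetical DR table modeled on this post.
dr_table = [
    Route(ipaddress.ip_network("172.16.10.0/24"), "LIF1", "connected"),
    Route(ipaddress.ip_network("0.0.0.0/0"), "169.254.0.2", "default route to SR"),
]

print(lookup(dr_table, "172.16.10.11").next_hop)    # LIF1: handled locally by the DR
print(lookup(dr_table, "192.168.100.10").next_hop)  # 169.254.0.2: handed to the SR
```

Because 0.0.0.0/0 has prefix length 0, it only wins when nothing more specific matches, which is why traffic to subnets connected to the DR never leaves it.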

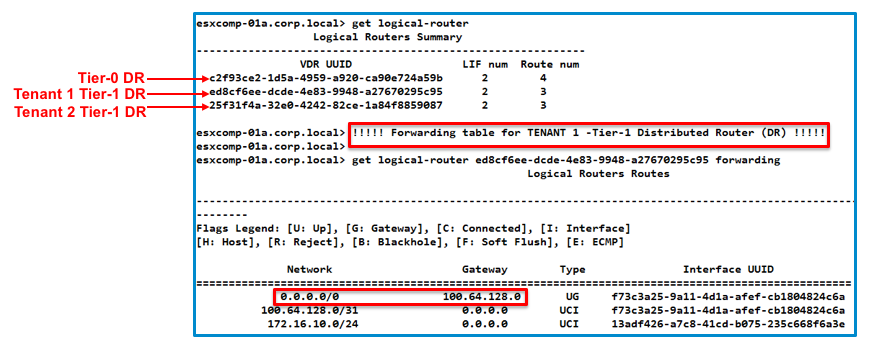

The following output from the DR on the ESXi host shows the default route with a gateway (next hop) of the SR, 169.254.0.2.

![]()

Figure 4: DR Routing table showing default route to SR

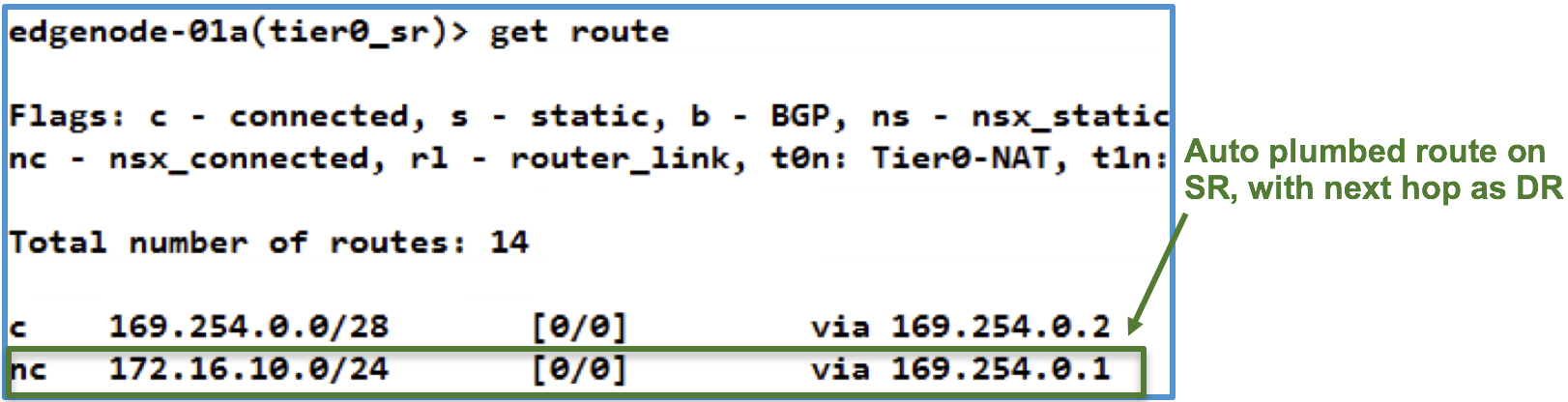

The management plane also creates routes on the SR for the subnets connected to the DR, with a next hop of the DR’s Intra-Tier transit link IP, which is 169.254.0.1 in this case.

These routes appear as “NSX Connected” routes on the SR. The following output from the Edge node shows the SR routing table. Observe that 172.16.10.0/24 appears as an NSX Connected route with the DR, 169.254.0.1, as the next hop.

![]()

Figure 5: SR Routing table
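The management plane’s job here can be pictured as a simple transformation: every subnet attached to the DR becomes an SR route pointing back at the DR’s transit IP. The Python below is a hypothetical model of that plumbing, not an NSX-T API; `sr_routes_from_dr` is an illustrative name, and the BGP entry is the one described for this topology in the packet walk that follows.

```python
import ipaddress
from typing import List, Tuple

DR_TRANSIT_IP = "169.254.0.1"  # DR end of the Intra-Tier transit link in this post

SrRoute = Tuple[str, str, str]  # (prefix, next hop, route type)


def sr_routes_from_dr(dr_subnets: List[str]) -> List[SrRoute]:
    """Hypothetical: derive "NSX Connected" SR routes from the DR's downlink subnets."""
    # ip_network() normalizes the prefix and rejects entries with host bits set.
    return [(str(ipaddress.ip_network(s)), DR_TRANSIT_IP, "NSX Connected")
            for s in dr_subnets]


bgp_routes: List[SrRoute] = [("192.168.100.0/24", "192.168.240.1", "BGP")]

sr_table = sr_routes_from_dr(["172.16.10.0/24"]) + bgp_routes
for prefix, next_hop, kind in sr_table:
    print(f"{prefix:<18} via {next_hop:<15} {kind}")
```

Adding a new downlink subnet to the DR in this model only means appending it to the input list; no routing protocol runs between the two components.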

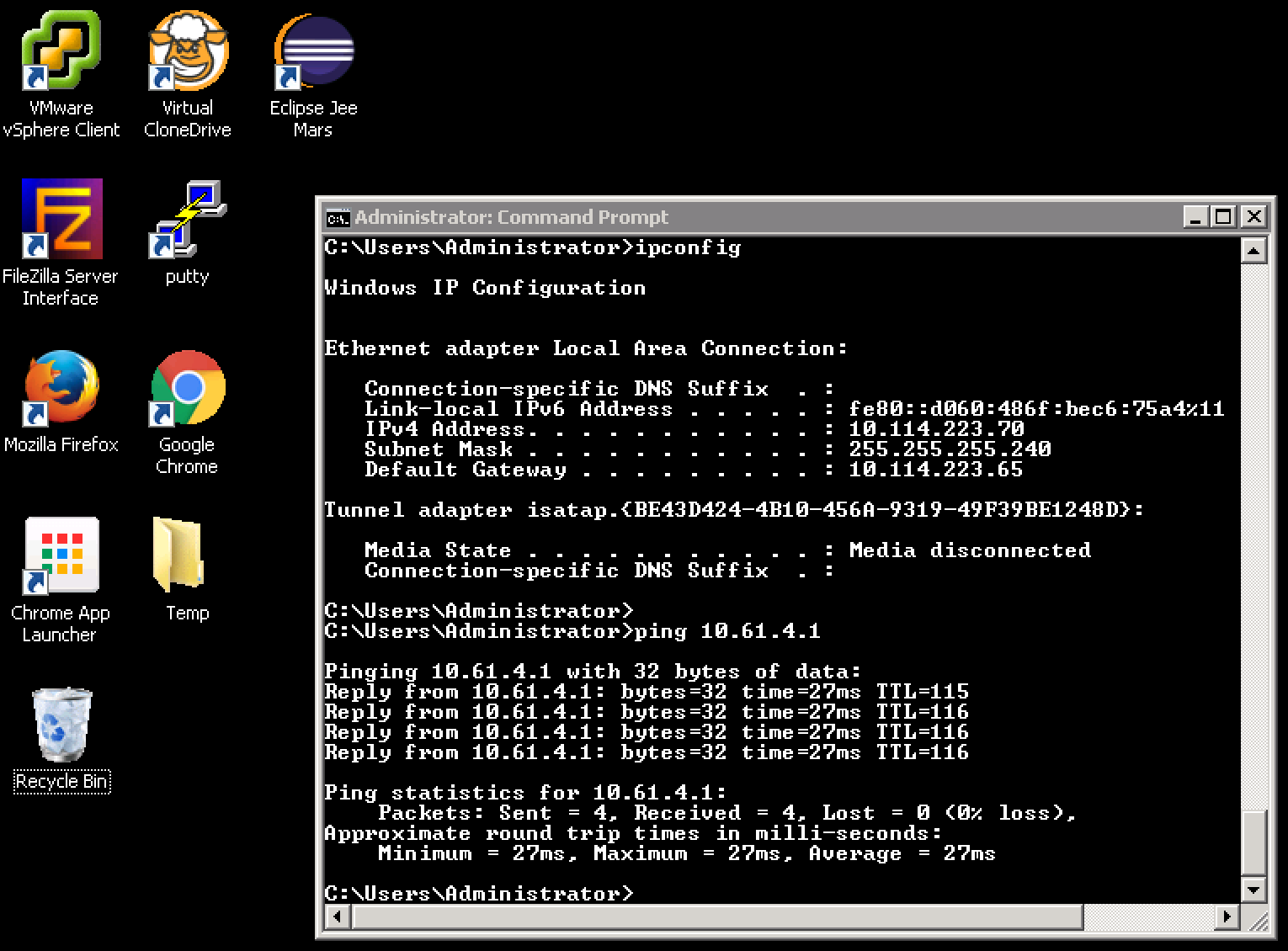

Let’s take a look at this N-S traffic flow in detail. In the following topology, a Web VM hosted on an ESXi hypervisor needs to communicate with a device external to the datacenter.

As mentioned before, an Edge node is a device that provides connectivity to the physical infrastructure. In this example, a BGP peering has been established between the physical router interface (192.168.240.1) and the SR hosted on the Edge node, whose uplink IP address is 192.168.240.3. The physical router learns the 172.16.10.0/24 prefix in BGP with the SR on the Edge node (192.168.240.3) as the next hop, and the SR learns 192.168.100.0/24 in BGP with the physical router (192.168.240.1) as the next hop.

![]()

Figure 6: Packet walk from VM in Datacenter to Physical Infrastructure

1. Web1 VM (172.16.10.11) sends a packet to 192.168.100.10. The packet is sent to the Web1 VM’s default gateway interface located on the local DR, i.e. 172.16.10.1.

2. The packet is received on the local DR. The destination 192.168.100.10 is external to the datacenter, so this packet needs to go to the Edge node that has connectivity to the physical infrastructure. The DR has a default route (refer to Figure 4) with its corresponding SR, hosted on the Edge node, as the next hop. The DR sends this packet to the SR, and since the SR is located on the Edge node, the packet must be encapsulated in GENEVE and sent out.

3. The Edge node is also a transport node, which means it encapsulates and decapsulates traffic sent to or received from compute hypervisors. The ESXi TEP encapsulates the original packet and sends it to the Edge node TEP with outer Src IP=192.168.140.151, Dst IP=192.168.140.160.

The following is the packet capture from the ESXi host after encapsulation. Observe the VNI 0x005389, decimal equivalent 21385. This VNI was auto-assigned to the link between the DR and SR. Also observe the inner source and destination MAC addresses of the packet: the inner source MAC address is that of the DR’s Intra-Tier transit link, and the inner destination MAC is that of the SR’s Intra-Tier transit link.

![]()

Figure 7: Packet capture on ESXi after GENEVE encapsulation

Why am I looking at the DR on the Edge node for the source MAC address? Remember, the DR is identical on all transport nodes, including Edge nodes: same IP addresses and same MAC addresses.

4. Upon receiving the packet, the Edge node TEP decapsulates it, removes the outer header, and sends the packet to the SR (as the destination MAC address in the inner header is that of the SR).

5. The SR does a routing lookup, which determines that the route 192.168.100.0/24 is learnt via the uplink port with next hop IP address 192.168.240.1, i.e. the physical router.

![]()

Figure 8: SR Routing table

6. The packet is sent on the external VLAN to the physical router and is delivered to 192.168.100.1.
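To see where the VNI in Figure 7 sits on the wire, here is a minimal Python sketch of the 8-byte Geneve base header defined in RFC 8926 (Geneve is carried over UDP, destination port 6081). It omits Geneve options, which real NSX-T packets may carry, and it builds only the Geneve header, not the outer UDP/IP layers. Packing 0x005389 and reading it back confirms the decimal value 21385.

```python
import struct

PROTO_ETHERNET = 0x6558  # Protocol Type: inner payload is an Ethernet frame


def build_geneve_header(vni: int) -> bytes:
    """Pack the 8-byte Geneve base header (RFC 8926) with no options."""
    if not 0 <= vni < 1 << 24:
        raise ValueError("VNI must fit in 24 bits")
    ver_optlen = 0  # version 0, option length 0
    flags = 0       # O (control) and C (critical) bits clear
    # Bytes 0-3: ver/optlen, flags, protocol type; bytes 4-7: VNI (24 bits) + reserved byte.
    return struct.pack("!BBHI", ver_optlen, flags, PROTO_ETHERNET, vni << 8)


def parse_vni(header: bytes) -> int:
    """Extract the 24-bit VNI from bytes 4-6 of a Geneve header."""
    (word,) = struct.unpack("!I", header[4:8])
    return word >> 8


hdr = build_geneve_header(0x005389)  # VNI seen in the capture
print(hdr.hex())                     # 0000655800538900
print(parse_vni(hdr))                # 21385
```

The same functions round-trip the Web LS VNI used on the return path (21384, or 0x5388).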

What’s important to note here is that routing always happens at the source hypervisor. In this case, routing was done on the source hypervisor, i.e. ESXi, to determine that the packet needs to be sent to the SR hosted on the Edge node. Hence, no lookup was required on the DR on the Edge node. After removing the tunnel encapsulation on the Edge node, the packet was sent directly to the SR.

Let’s look at the return packet from 192.168.100.1 to Web VM.

![]()

Figure 9: Packet walk from Physical Infrastructure to VM in Datacenter

- External device 192.168.100.1 sends the return packet to Web1 VM (172.16.10.11) following the BGP route that was learnt via SR on Edge node.

- Routing lookup happens on SR which determines that 172.16.10.0/24 is reachable via DR 169.254.0.1 (refer Figure 8), traffic is sent to local DR via Intra Tier transit link between SR and DR.

- DR does a routing lookup which determines that the destination subnet 172.16.10.0/24 is a directly connected subnet and it’s on LIF1 i.e the interface connected to Web-LS. A lookup is performed in LIF1 ARP table to determine the MAC address associated with Web1 VM IP address. This destination MAC, MAC1 is learnt via remote TEP 192.168.140.151 i.e. ESXi host where Web1 VM is hosted.

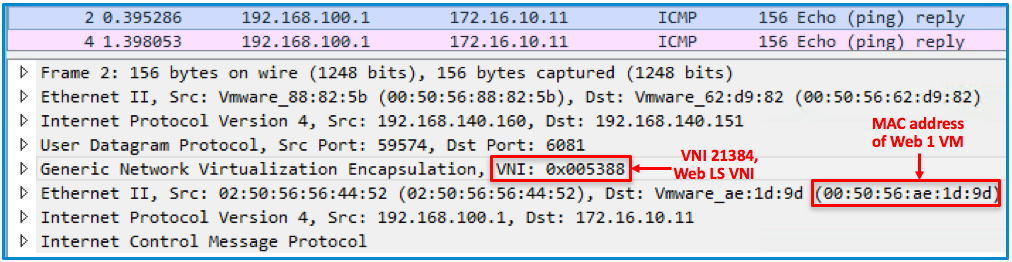

- Edge node TEP encapsulates the original packet and sends it to the remote TEP with a Outer Src IP=192.168.140.160, Dst IP=192.168.140.151. The destination VNI (virtual network identifier) in this GENEVE encapsulated packet is of Web LS (21384).

![]()

Figure 10: Packet capture for return traffic

5. Upon receiving the packet, the ESXi host decapsulates it and removes the outer header. An L2 lookup is performed in the local MAC table associated with LIF1.

6. The packet is delivered to Web1 VM.

What’s important to note here is that routing always happens at the source transport node. In this case, routing was done by the DR hosted on the Edge node. Hence, no lookup was required on the DR hosted on the ESXi hypervisor, and the packet was sent directly to the VM after the tunnel encapsulation header was removed.
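The return-path lookups on the Edge node chain two tables: the LIF’s ARP table (IP to MAC) and the table that maps a MAC to the remote TEP behind it. Here is a minimal Python model using the values from this walk; the table names, the `Encap` type, and `encapsulate_to_vm` are hypothetical, and `MAC1` stands in for Web1’s actual MAC address.

```python
from typing import Dict, NamedTuple


class Encap(NamedTuple):
    outer_src: str
    outer_dst: str
    vni: int
    inner_dst_mac: str


LOCAL_TEP = "192.168.140.160"  # Edge node TEP
WEB_LS_VNI = 21384             # VNI of Web LS from the capture
ARP_LIF1: Dict[str, str] = {"172.16.10.11": "MAC1"}       # IP -> MAC on LIF1
MAC_TO_TEP: Dict[str, str] = {"MAC1": "192.168.140.151"}  # MAC -> remote TEP (ESXi)


def encapsulate_to_vm(dst_ip: str) -> Encap:
    """Resolve the VM's MAC, find the TEP behind it, and describe the GENEVE outer header."""
    mac = ARP_LIF1[dst_ip]        # ARP lookup on the egress LIF
    remote_tep = MAC_TO_TEP[mac]  # which hypervisor hosts that MAC
    return Encap(LOCAL_TEP, remote_tep, WEB_LS_VNI, mac)


print(encapsulate_to_vm("172.16.10.11"))
# Encap(outer_src='192.168.140.160', outer_dst='192.168.140.151', vni=21384, inner_dst_mac='MAC1')
```

A miss in either dictionary raises `KeyError` in this sketch; how NSX-T actually resolves an unknown IP or MAC is outside its scope.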

What we have configured so far is called a single-tiered topology. Observe that I have named the logical router Tier-0. Tier-0 is the logical router that connects to logical switches or logical routers southbound and to the physical infrastructure northbound. The following diagram shows a single-tiered routing logical topology. Again, since the Tier-0 logical router connects to the physical infrastructure, a Tier-0 SR is instantiated on the Edge node and the Tier-0 DR is distributed across all transport nodes.

![]()

Figure 11: Single Tiered Routing Topology

ECMP

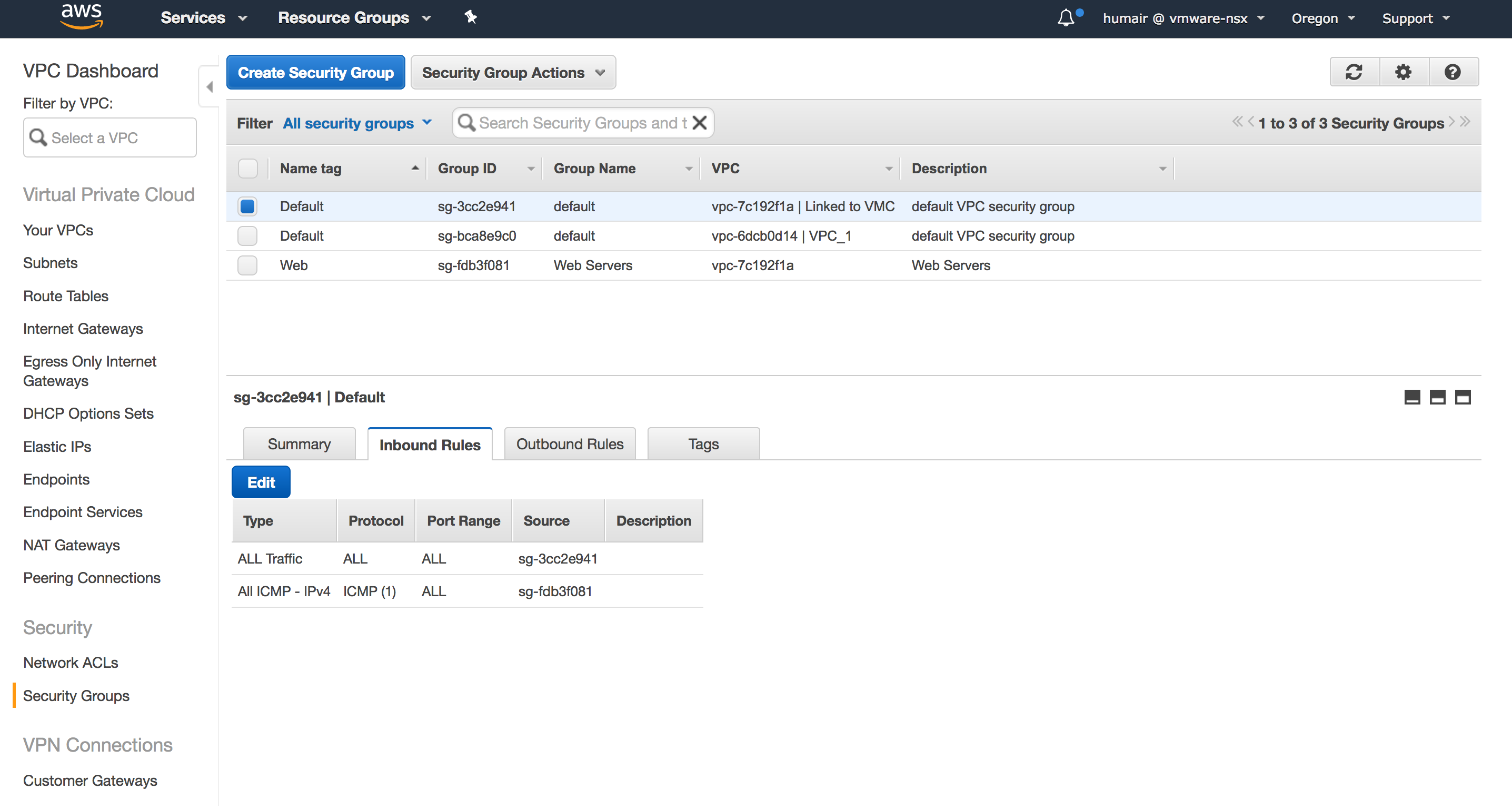

Now that we understand the basics of logical router components (DR and SR) and how SR is hosted on Edge node, let’s provide redundancy for N-S traffic.

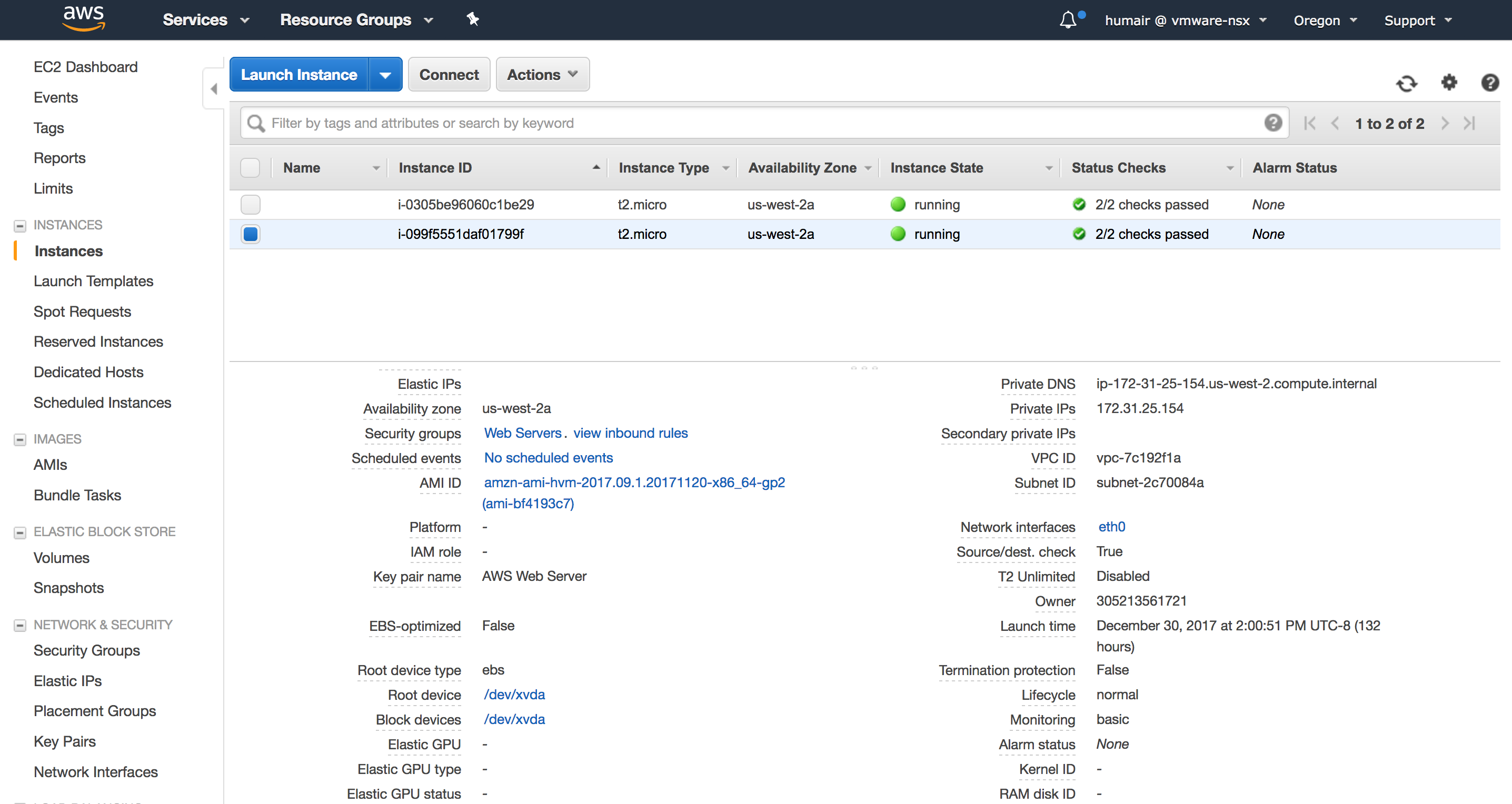

If we have just one Edge node with one uplink connected to the TOR and this Edge node fails, then N-S traffic fails too. We need redundancy for the Edge node where the SR is instantiated, so that if one Edge node fails, the SR hosted on the other Edge node continues to forward N-S traffic and to provide any other configured centralized services. In addition to providing redundancy, the other Edge node also helps meet bandwidth demand.

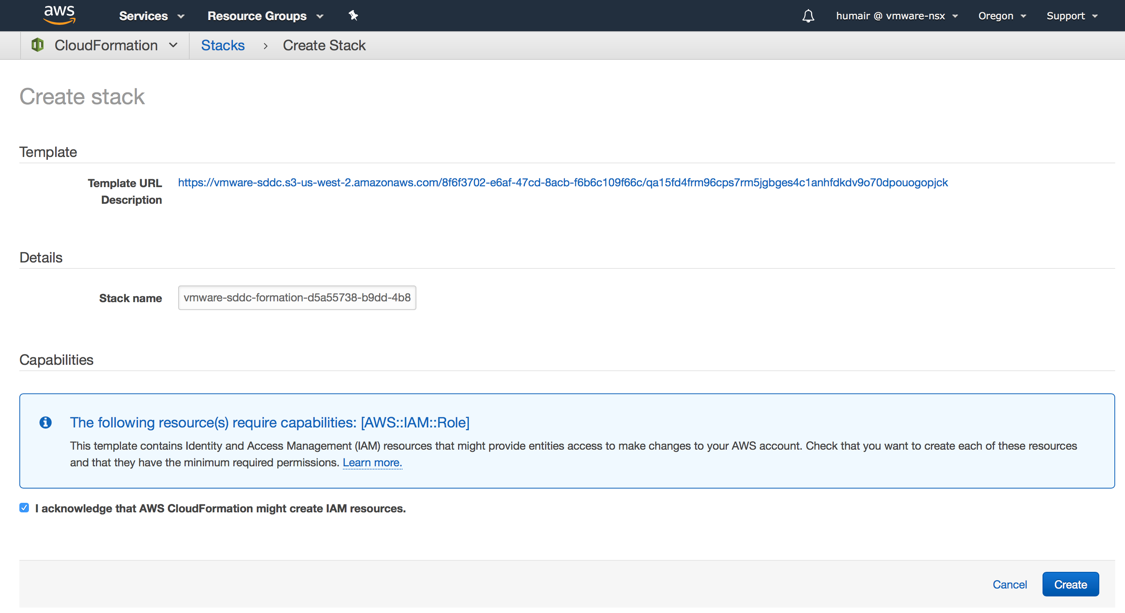

To demonstrate ECMP, I have configured another Edge node, EN2, with an uplink connected to another physical router. EN2 is added to the same Edge cluster as EN1.

A BGP peering has also been established between the physical router 2 interface (192.168.250.1) and the SR hosted on EN2, whose uplink IP address is 192.168.250.3.

To recap the SR/DR concept: this Tier-0 logical router has a Tier-0 DR running as a kernel module on all transport nodes, i.e. the ESXi hypervisor and both Edge nodes (EN1 and EN2). Since this Tier-0 connects to physical routers using uplinks on EN1 and EN2, a Tier-0 SR is instantiated on both EN1 and EN2.

The following figure shows a logical topology with an Edge cluster containing two Edge nodes, EN1 and EN2. The Tier-0 SR is configured in active/active high availability mode to provide ECMP.

![]()

Figure 12: Single Tiered Routing ECMP Topology

The following output from the Tier-0 DR on the ESXi host shows two default routes: one via the Tier-0 SR on EN1 (169.254.0.2) and one via the Tier-0 SR on EN2 (169.254.0.3). N-S traffic is load balanced across both default routes installed on the ESXi hypervisor. The Tier-0 SR hosted on EN1 has a BGP route to 192.168.100.0/24 with Physical Router 1 (192.168.240.1) as the next hop, and the Tier-0 SR hosted on EN2 has the same route via Physical Router 2 (192.168.250.1).

The Tier-0 SRs on EN1 and EN2 also advertise the Web LS subnet 172.16.10.0/24 in BGP to both physical routers, so that both uplinks can be used for incoming traffic.

![]()

Figure 13: ECMP
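ECMP load balancing is typically done per flow, so that all packets of one flow take the same next hop and are not reordered. This post does not specify NSX-T’s hash inputs or algorithm, so the Python sketch below is an assumption: it hashes a 5-tuple with SHA-256 purely as a stand-in, and `pick_next_hop` is hypothetical. The flows (TCP to port 443) are illustrative.

```python
import hashlib
from typing import List, Tuple

# Tier-0 DR default routes on the ESXi host: one per Tier-0 SR (EN1, EN2).
NEXT_HOPS = ["169.254.0.2", "169.254.0.3"]

FiveTuple = Tuple[str, str, int, int, int]  # src IP, dst IP, protocol, src port, dst port


def pick_next_hop(flow: FiveTuple, next_hops: List[str]) -> str:
    """Hypothetical per-flow ECMP: same flow -> same hash -> same SR."""
    key = "|".join(map(str, flow)).encode()
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:4], "big")
    return next_hops[bucket % len(next_hops)]


# Several TCP flows from Web1 to the external network, differing only in source port.
for sport in range(49152, 49158):
    flow = ("172.16.10.11", "192.168.100.10", 6, sport, 443)
    print(sport, "->", pick_next_hop(flow, NEXT_HOPS))
```

In this model, removing the route via EN1 from `next_hops` sends every flow to EN2; with modulo hashing, changing the number of paths can also remap flows among the remaining ones.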

This concludes single-tiered routing in NSX-T. I will discuss multi-tiered routing architecture in the next post.

Learn More

https://docs.vmware.com/en/VMware-NSX-T/index.html

The post NSX-T: Routing where you need it (Part 2, North-South Routing) appeared first on Network Virtualization.

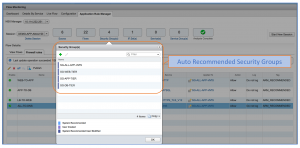

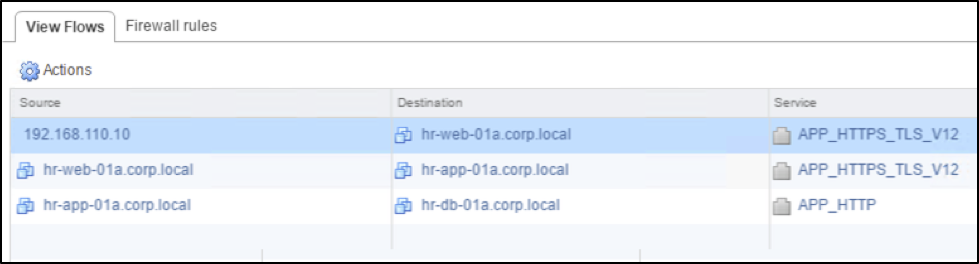

Figure: ARM recommended Security groups for 3-tier application

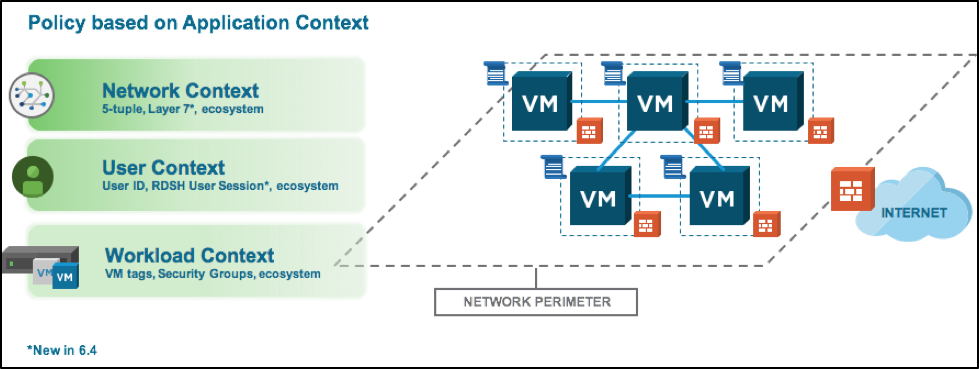

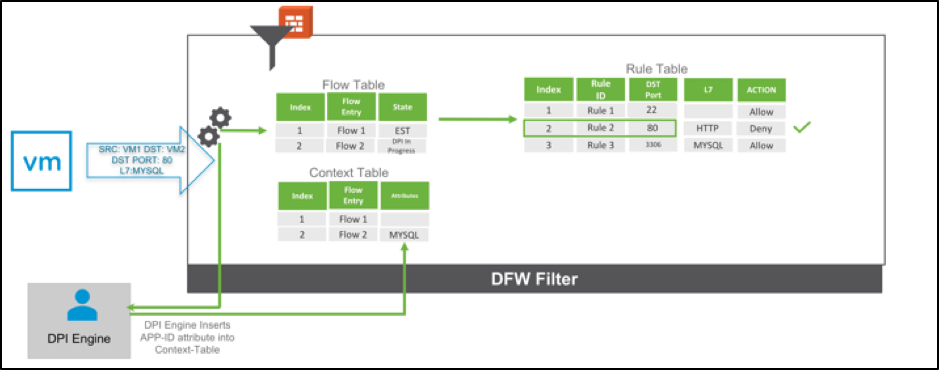

Figure: NSX drives policy based on Network, User and Workload Context

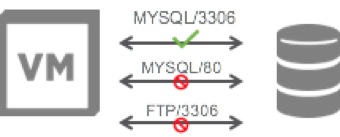

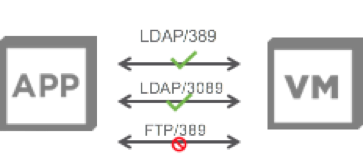

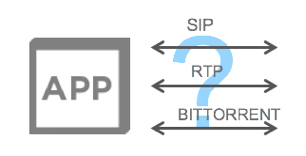

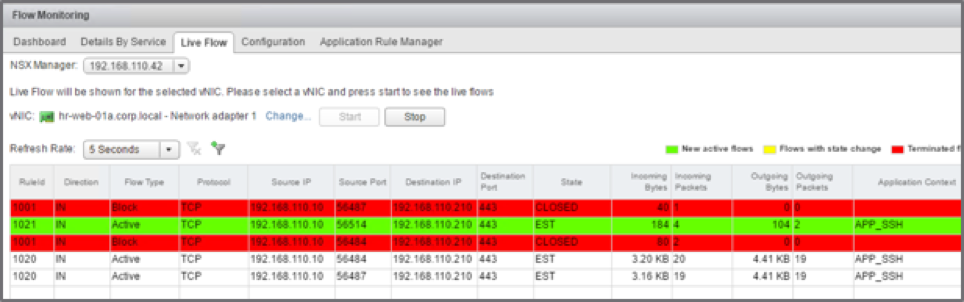

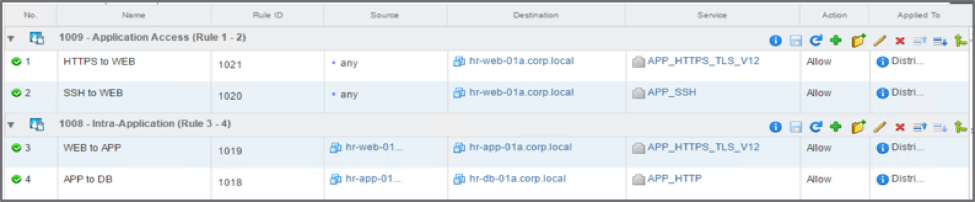

Figure: Intra-application flows identified based on Layer 7 Application Rule Manager

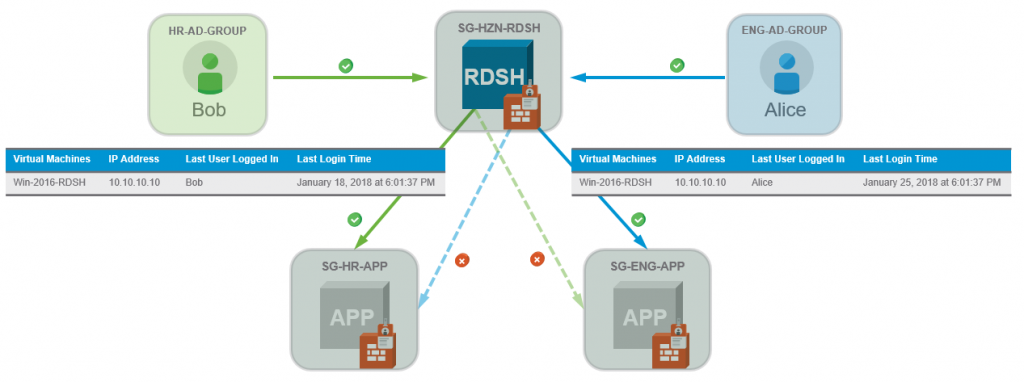

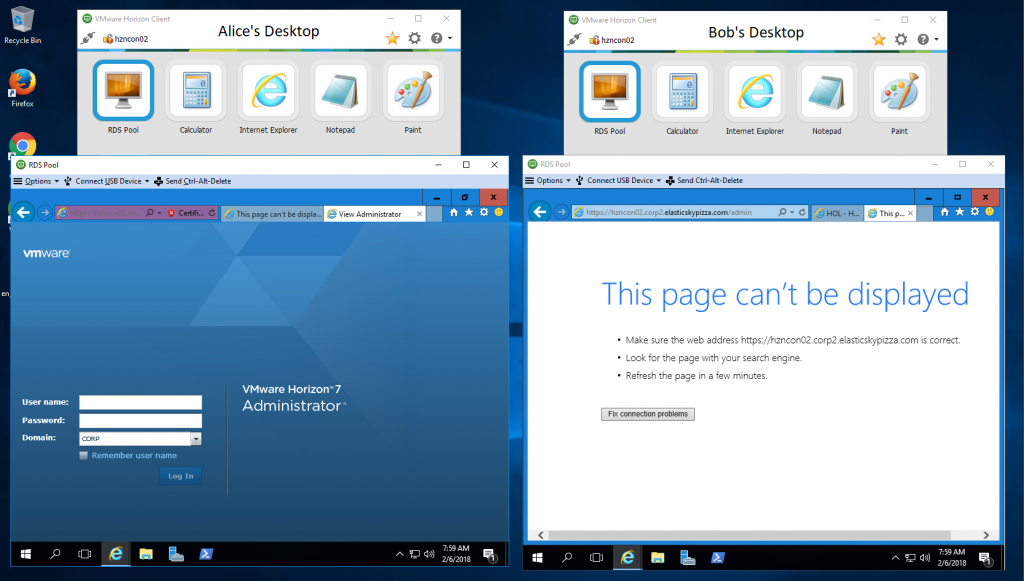

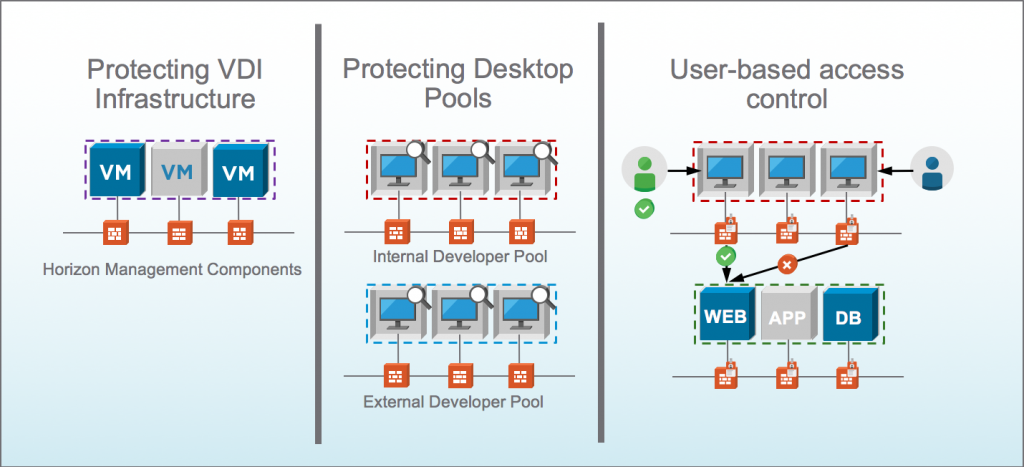

Figure: Virtual Desktop Infrastructure and Virtual User Sessions

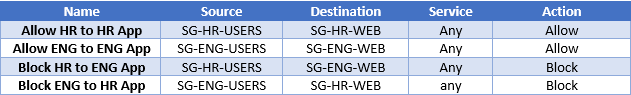

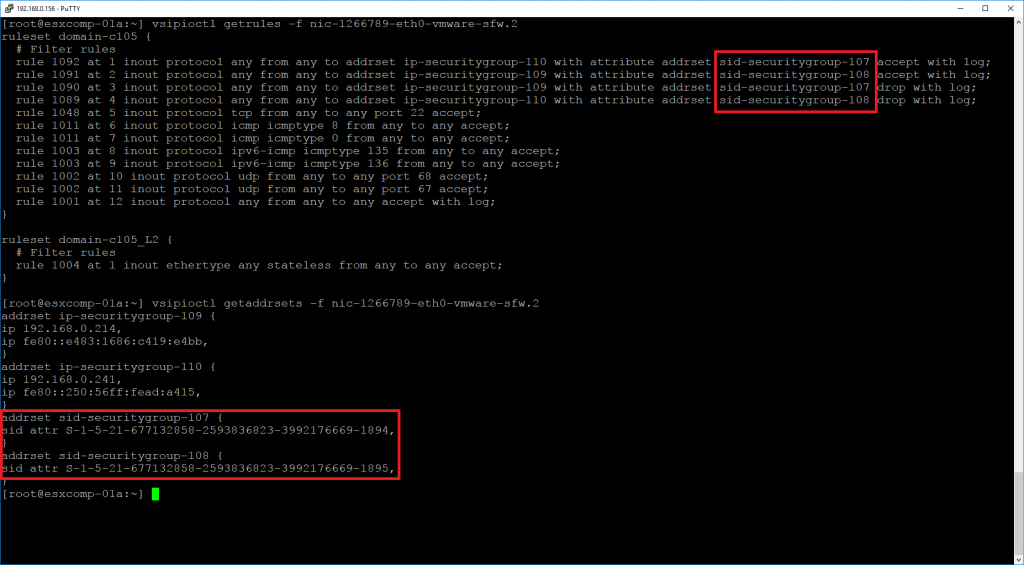

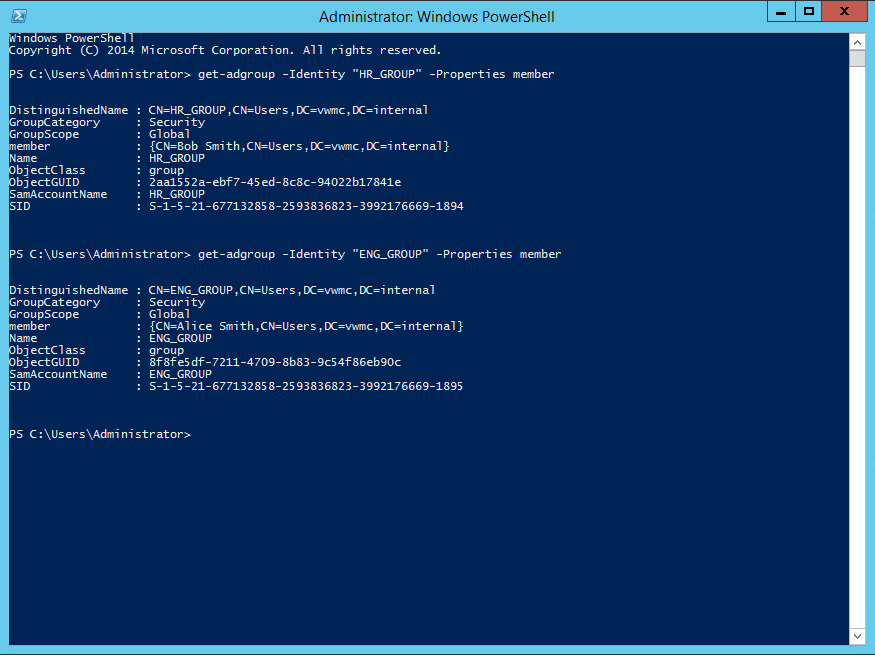

Figure: Defining a Security Group based on Directory Group Membership