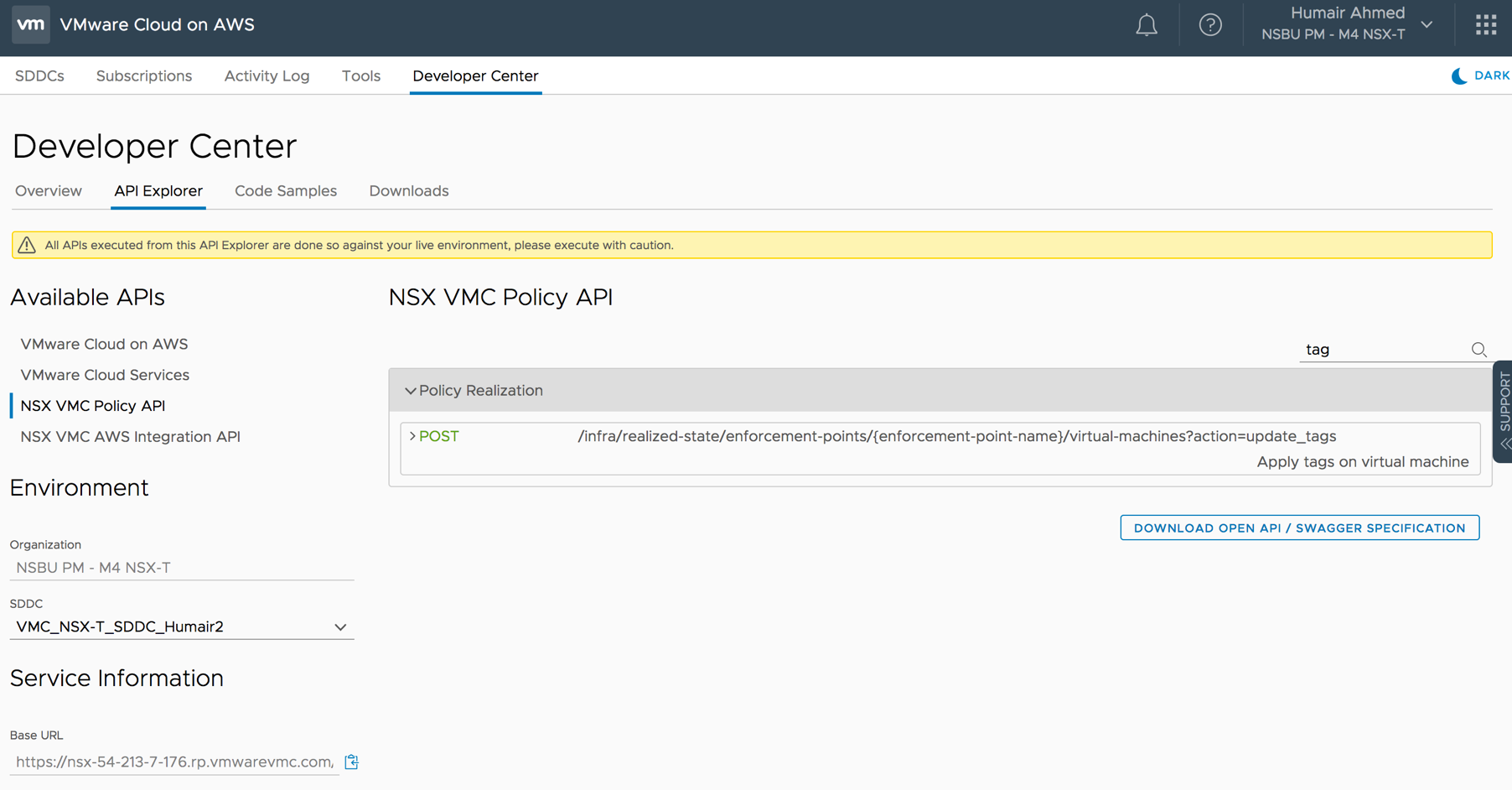

The focus of this blog is VMware Enterprise PKS and Kubernetes operations with NSX-T Data Center. For the sake of completeness, I will start with the high-level NSX-T deployment steps without going too far into the details.

This blog does not focus on NSX-T Architecture and Deployment in Kubernetes or Enterprise PKS environments, but it highlights some of those points as needed.

Deploying NSX-T Data Center

Several things must be configured in NSX-T before deploying Enterprise PKS. At a high level, here are the initial steps of installing NSX-T:

- Download NSX-T Unified Appliance OVA.

- Deploy NSX-T Manager (Starting from NSX-T 2.4, three managers could be deployed with a Virtual IP).

- Add vCenter as a Compute Manager in NSX-T

- Deploy NSX-T Controllers. (Starting from NSX-T 2.4 the controllers are merged with NSX-T manager in a single appliance)

- Deploy one or more pairs of NSX-T Edges with a minimum of Large Size. (Large Size is required by Enterprise PKS, Bare-Metal Edges could be used too).

- Install NSX Packages on ESXi Hosts

- Create an Overlay Transport Zone and a VLAN Transport Zone.

- Create a TEP IP Pool.

- Add ESXi Hosts as Transport Nodes to the Overlay Transport Zone.

- Add the NSX-T Edge as a Transport Node to the Overlay and VLAN Transport Zones.

All above steps are standard NSX-T Deployment steps and can be achieved from the NSX-T Manager UI.

More info on NSX-T could be found here.

Preparing NSX-T for Enterprise PKS

Before we install PKS, we need to create several objects in NSX-T. Here is the list:

- Tier-0 Router with connectivity to the physical network established using BGP or static routes.

- Pods-IP-Block for Kubernetes Pods Internal IP Addresses. (/16 Block)

- Nodes-IP-Block for Kubernetes nodes (VMs) IP addresses (/16 Block)

- IP-Pool-VIPs for external connectivity and load balancing VIPs (typically a /24, but smaller subnets can be used). This pool must be routable in the physical infrastructure. Starting with NSX-T 2.4, the pool can be on the same subnet as the T0-Router uplink interface, which simplifies routing in and out of the environment. Before NSX-T 2.4, we had to use BGP or create a static route to this IP pool, which can be challenging in a lab environment. Now the pool can be directly connected to the physical network, which removes the need for dynamic or static routing.

- Generating and Registering the NSX Manager Certificate for PKS (steps)

- Generating and Registering the NSX Manager Superuser Principal Identity Certificate and Key (steps).

- (Optional) Tier-1 Router and Logical switch for PKS Management components*

- (Optional) NAT Items*

*Optional steps depend on the design. PKS management VMs could be connected to an NSX-T Logical Switch, or they could be connected to a VDS dPG outside of NSX-T.
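For the IP-Pool-VIPs item in the list above, whether the pool counts as "directly connected" comes down to a simple subnet check. Here is a minimal sketch of that check in Python. The function name and all addresses are hypothetical lab values, not something NSX-T or PKS provides.

```python
import ipaddress

def pool_is_directly_connected(uplink_cidr: str, pool_start: str, pool_end: str) -> bool:
    """Return True when the whole pool range sits inside the T0 uplink subnet."""
    subnet = ipaddress.ip_interface(uplink_cidr).network
    start = ipaddress.ip_address(pool_start)
    end = ipaddress.ip_address(pool_end)
    return start <= end and start in subnet and end in subnet

# Hypothetical values: T0 uplink 172.17.1.2/24 and two candidate VIP pools.
same_subnet = pool_is_directly_connected("172.17.1.2/24", "172.17.1.10", "172.17.1.50")
other_subnet = pool_is_directly_connected("172.17.1.2/24", "172.17.2.10", "172.17.2.50")
print(same_subnet, other_subnet)
```

If the check fails, the pool still works, but it needs BGP or a static route on the physical side as described above.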

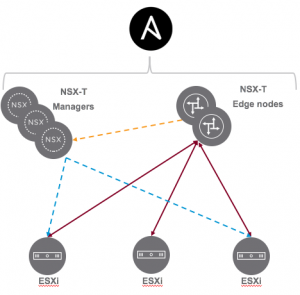

If they are connected to a VDS dPG, we cannot apply NAT to the K8s-Nodes IP addresses, because that would break connectivity between the Kubernetes cluster node VMs and the Enterprise PKS management components. This is called No-NAT Topology.

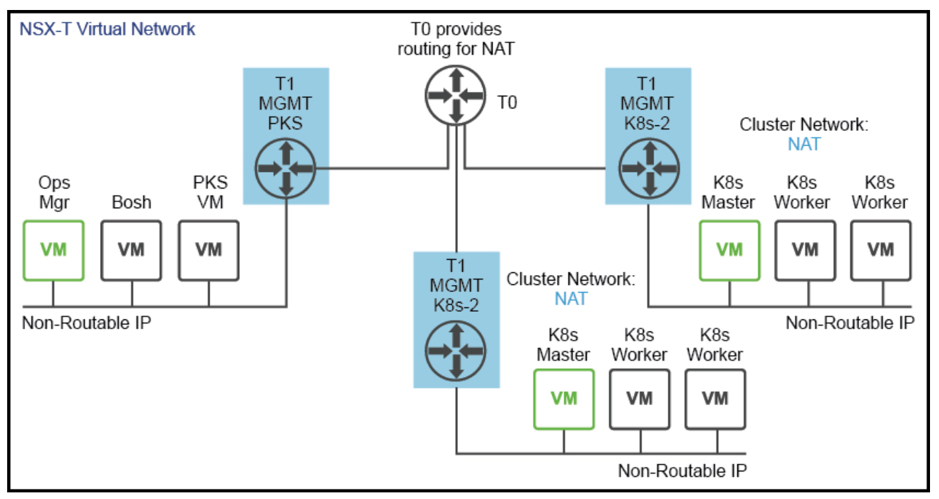

No-NAT Topology


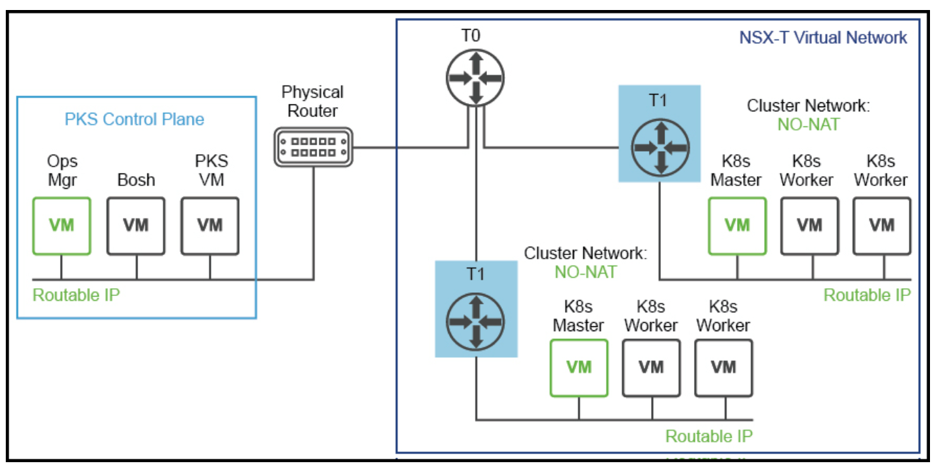

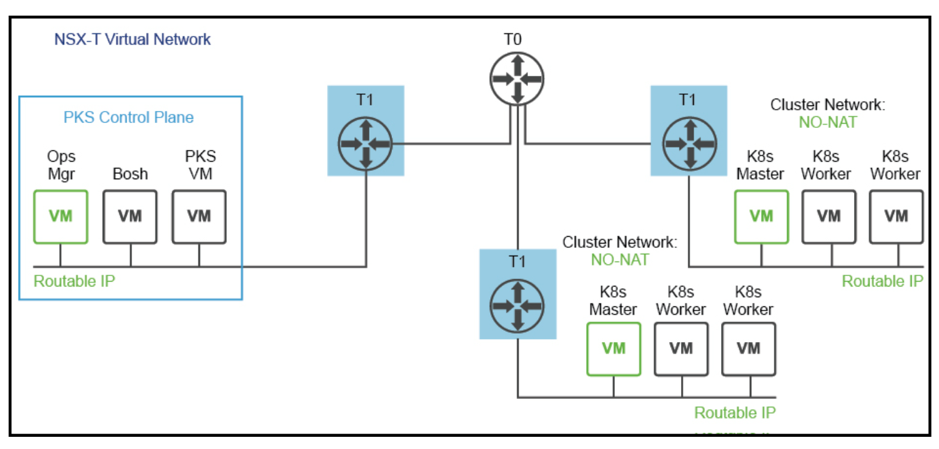

If the PKS management VMs are connected to an NSX-T Logical Switch, we can choose either NAT or No-NAT for the K8s-Nodes IP addresses. NAT saves routable IP addresses. These options are called NAT Topology and No-NAT with NSX-T.

Direct Routing for Kubernetes Nodes

NAT Kubernetes Nodes

NAT or No-NAT mode could be configured during Enterprise PKS Installation

More info on PKS design could be found here.

Preparing NSX-T for Kubernetes (non-PKS)

Enterprise PKS automates the creation and configuration of the NSX Container Plugin (NCP) and other NSX-related components. In non-PKS environments those steps must be done manually. The preparation is similar to what we did for Enterprise PKS. The only difference is that we don’t need a Nodes-IP-Block, since we are talking about a single Kubernetes cluster. On top of that we need to install OVS, the NSX Node Agent, and NCP. More details could be found here.

VMware Enterprise PKS Network Profiles

This section describes how to use network profiles when creating a Kubernetes cluster with VMware Enterprise PKS on vSphere with NSX-T. Network profiles will allow customizing NSX-T configuration parameters while creating the Kubernetes Cluster.

Network Profiles are optional in Enterprise PKS. They allow the administrator to customize the networking settings for a Kubernetes cluster instead of using the default values configured during the initial Enterprise PKS installation.

To assign a Network Profile, three steps are required:

- Define a Network Profile JSON File

- Create a Network Profile using the JSON File.

- Create a Kubernetes Cluster with the created Network Profile.

Define a Network Profile JSON File

The following parameters could be defined on the Network Profile as of Enterprise PKS 1.4:

- Load Balancer Sizing

- Custom Pod Networks

- Routable Pod Networks

- Bootstrap Security Groups for Kubernetes Master Nodes

- Pod Subnet Prefix

- Custom Floating IP Pool

- DNS Configuration for Kubernetes Cluster

- T0-Router selection

A sample JSON file is shown below.

k8s-dev-cluster-np.json

    {
      "name": "k8s-dev-cluster-np",
      "description": "Network Profile for K8s Dev Cluster",
      "parameters": {
        "lb_size": "medium",
        "t0_router_id": "c76ce484-c55e-4ec9-9bab-d0199ae298fd",
        "fip_pool_ids": [
          "e50e8f6e-1a7a-45dc-ad49-3a607baa7fa2"
        ],
        "pod_routable": true,
        "pod_ip_block_ids": [
          "ebe78a74-a5d5-4dde-ba76-9cf4067eee55",
          "ebe78a74-a5d5-4dde-ba76-9cf4067eee56"
        ],
        "master_vms_nsgroup_id": "9b8d535a-d3b6-4735-9fd0-56305c4a5292",
        "pod_subnet_prefix": 26
      }
    }

For the Parameters section, one or more parameters could be configured as needed.
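Since a single missing comma or stray space in the profile is easy to overlook, it can help to sanity-check the file before handing it to the PKS CLI. The sketch below is my own illustration, not PKS's validation logic: the function name `load_profile` and the specific checks are assumptions, and only the field names come from the sample profile.

```python
import json

# Sizes taken from the lb_size values discussed in this blog.
ALLOWED_LB_SIZES = {"small", "medium", "large"}

def load_profile(text: str) -> dict:
    """Parse a network profile and run a few basic sanity checks."""
    profile = json.loads(text)  # raises json.JSONDecodeError on syntax errors
    if not profile.get("name"):
        raise ValueError("profile needs a name")
    params = profile.get("parameters", {})
    size = params.get("lb_size", "small")
    if size not in ALLOWED_LB_SIZES:
        raise ValueError(f"unexpected lb_size: {size}")
    # UUID fields should not carry leading or trailing whitespace.
    for key in ("t0_router_id", "master_vms_nsgroup_id"):
        value = params.get(key)
        if value is not None and value != value.strip():
            raise ValueError(f"{key} has stray whitespace")
    return profile

sample = """
{
  "name": "k8s-dev-cluster-np",
  "parameters": {
    "lb_size": "medium",
    "pod_routable": true,
    "pod_subnet_prefix": 26
  }
}
"""
profile = load_profile(sample)
print(profile["parameters"]["lb_size"])
```

A syntax problem surfaces as `json.JSONDecodeError` with a line and column number, which is usually quicker to act on than a rejected CLI call.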

Notice that the Load Balancer size in the above profile is Medium. If no size is defined, the default size, which is Small, will be used. Here is a sample of the NSX-T Load Balancer sizes in NSX-T 2.4 (scalability may change based on the release):

| Load Balancer Service | Small | Medium | Large |
| --- | --- | --- | --- |
| Number of virtual servers per load balancer | 20 | 100 | 1000 |
| Number of pools per load balancer | 60 | 300 | 3000 |
| Number of pool members per load balancer | 300 | 2000 | 7500 |
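To make the sizing choice concrete, here is a small sketch that picks the smallest load balancer size that fits a given requirement. The limits are copied from the NSX-T 2.4 table above. The function name is hypothetical, and the numbers should be rechecked against the release you are running.

```python
# Limits per size from the NSX-T 2.4 table: (virtual servers, pools, pool members)
LB_LIMITS = {
    "small": (20, 60, 300),
    "medium": (100, 300, 2000),
    "large": (1000, 3000, 7500),
}

def smallest_lb_size(virtual_servers: int, pools: int, members: int):
    """Return the smallest size that satisfies all three limits, or None."""
    for size in ("small", "medium", "large"):
        vs_max, pool_max, member_max = LB_LIMITS[size]
        if virtual_servers <= vs_max and pools <= pool_max and members <= member_max:
            return size
    return None

# Example: 50 virtual servers, 80 pools, 400 pool members.
choice = smallest_lb_size(50, 80, 400)
print(choice)
```

The returned value is what would go into the `lb_size` parameter of the network profile.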

Note the choice of the T0-Router

Using Network Profiles, a separate T0-Router could be used for a cluster, which allows for network segregation at the physical network level. The T0-Router UUID can be captured from the NSX-T Manager GUI. If no Network Profile is configured, the Kubernetes cluster will be attached to the default T0-Router configured during Enterprise PKS installation. The same goes for the other parameters: the default configuration from the PKS installation can be overridden using Network Profiles.

Another important configuration to notice:

"pod_routable": true

This setting instructs NSX to assign a routable subnet to the pods from the pod_ip_block_ids. The default would be to use NAT from the default IP Block configured during PKS installation on the PKS Tile in the Operations Manager.

More info on the JSON file template could be found here.

Now that we have created the JSON file, let’s move forward and create the Network Profile.

Create a Network Profile

To create a network profile, a single PKS command is used, pointing to the JSON file created in the previous step. First, let us take a look at all Enterprise PKS commands. Running pks --help lists them (Enterprise PKS 1.4).

    localadmin@pks-client:~$ pks --help

    The Pivotal Container Service (PKS) CLI is used to create, manage, and delete Kubernetes clusters. To deploy workloads to a Kubernetes cluster created using the PKS CLI, use the Kubernetes CLI, kubectl.

    Version: 1.4.0-build.194

    Usage:
      pks [command]

    Available Commands:
      cluster                 View the details of the cluster
      clusters                Show all clusters created with PKS
      create-cluster          Creates a kubernetes cluster, requires cluster name, an external host name, and plan
      create-network-profile  Create a network profile
      create-sink             Creates a sink for sending all log data to syslog://
      delete-cluster          Deletes a kubernetes cluster, requires cluster name
      delete-network-profile  Delete a network profile
      delete-sink             Deletes a sink from the given cluster
      get-credentials         Allows you to connect to a cluster and use kubectl
      get-kubeconfig          Allows you to get kubeconfig for your username
      help                    Help about any command
      login                   Log in to PKS
      logout                  Log out of PKS
      network-profile         View a network profile
      network-profiles        Show all network profiles created with PKS
      plans                   View the preconfigured plans available
      resize                  Changes the number of worker nodes for a cluster
      sinks                   List sinks for the given cluster
      update-cluster          Updates the configuration of a specific kubernetes cluster

    Flags:
      -h, --help      help for pks
          --version   version for pks

    Use "pks [command] --help" for more information about a command.

We will use the command below to create the Network Profile.

    localadmin@pks-client:~$ pks create-network-profile k8s-dev-cluster-np.json

    Network profile k8s-dev-cluster-np successfully created

The created Network Profiles can be listed using the command below.

    localadmin@pks-client:~$ pks network-profiles

    Name                Description
    k8s-dev-cluster-np  Network Profile for K8s Dev Cluster

Now that we have our Network Profile, let’s create a Kubernetes cluster.

Create a Kubernetes Cluster with Enterprise PKS Network Profile

To create a Kubernetes Cluster in Enterprise PKS with a network profile, run the following command:

    $ pks create-cluster CLUSTER-NAME --external-hostname HOSTNAME --plan PLAN-NAME --network-profile NETWORK-PROFILE-NAME

Example:

    $ pks create-cluster k8s-dev-cluster --external-hostname k8s-dev-cluster.vmware.com --plan small --network-profile k8s-dev-cluster-np

Note: “plan” is the Kubernetes cluster size, which is configured in the PKS tile in Ops Manager during PKS installation.

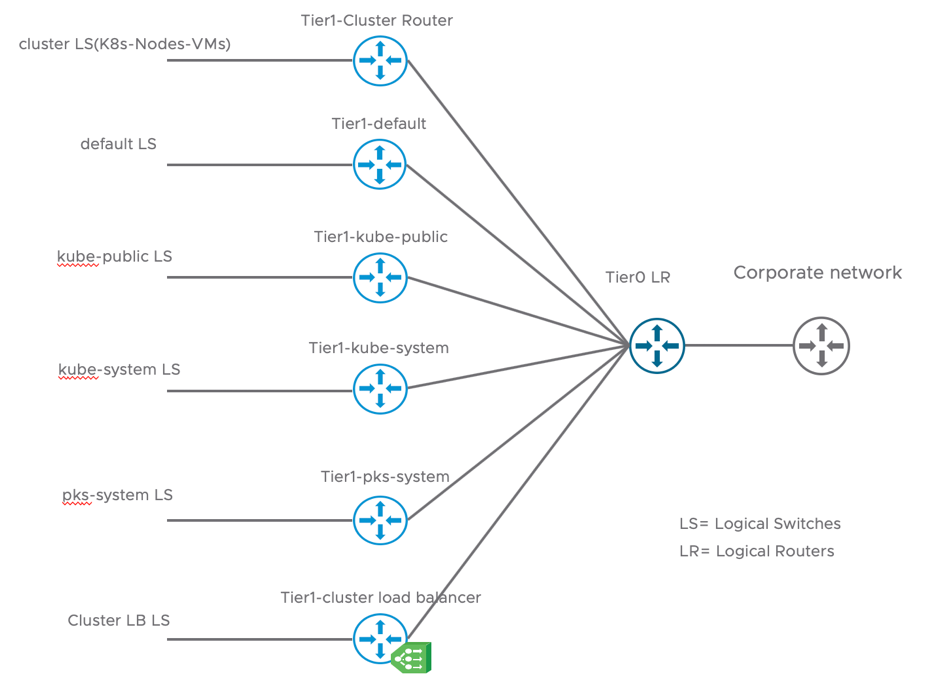

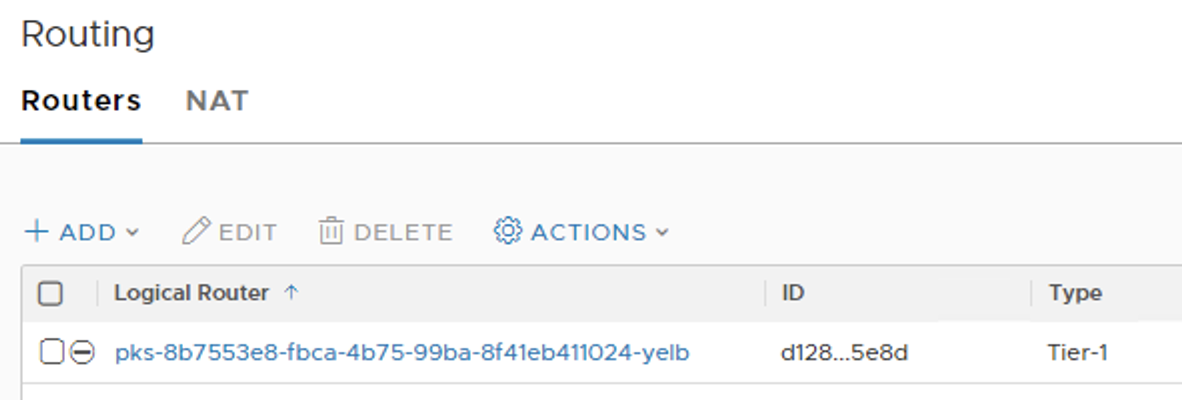

When we create the cluster, NSX-T will react based on the Network Profile configuration. Regardless of whether there is a Network Profile, NSX-T will create six T1-Routers: one for the K8s-Nodes VMs (cluster-router), four for the default Name Spaces (default, kube-public, kube-system, pks-system), and one for Load Balancing.

All the objects related to a cluster include the UUID of the cluster in their names. In our example the UUID of the cluster is 8b7553e8-fbca-4b75-99ba-8f41eb411024.

It can be found using the pks command below:

    $ pks clusters

    Name             Plan Name  UUID                                  Status     Action
    demo-cluster-01  medium     8b7553e8-fbca-4b75-99ba-8f41eb411024  succeeded  CREATE

We can see that six T1-Routers are created in NSX-T:


Six Logical Switches are created in NSX-T Logical Switches section:


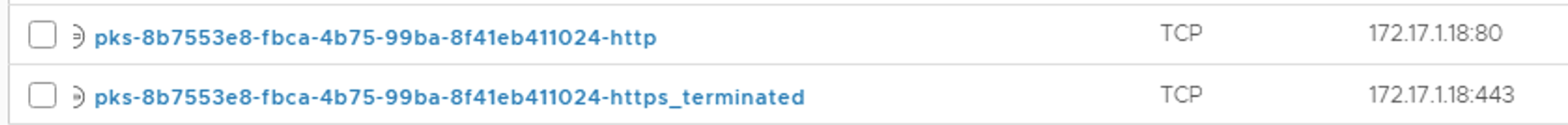

Two Load Balancer VIPs are created for Kubernetes Ingress: one for HTTP and another one for HTTPS.


Note: in Kubernetes environments other than Enterprise PKS, NCP can create a load balancer or use an existing one, depending on what is configured in ncp.ini in the field below.

    lb_service = <name_or_uuid>

Here is a logical diagram for created Logical Routers and Switches to make it more clear:


Each Logical Switch will have a Tier1 Logical Router as its Default Gateway. The addressing is assigned as follows:

- For the Tier1 cluster router, the first available subnet from the Nodes-IP-Block will be used, with the first IP address as the Default Gateway.

- For all Kubernetes Name Spaces, a subnet will be derived from the Pods-IP-Block.

- For the Load Balancer VIP, an IP address will be used from the IP-Pool-VIPs.

A SNAT entry will be configured in the Tier0 Logical Router for each Name Space using the IP-Pool-VIPs since it is the Routable one in the enterprise network.

A Load Balancer VIP will be placed in front of the Kubernetes Master VM(s). That VIP is taken from the IP-Pool-VIPs. This IP address is extremely important, since it is the main IP used to access the Kubernetes cluster. A DNS entry needs to be created for that IP address in order to access the cluster. The external IP address of the cluster can be found in the NSX-T Manager GUI or from the PKS CLI, in the "Kubernetes Master IP(s)" field shown below:

    localadmin@pks-client:~$ pks cluster demo-cluster-01

    Name:                     demo-cluster-01
    Plan Name:                medium
    UUID:                     8b7553e8-fbca-4b75-99ba-8f41eb411024
    Last Action:              CREATE
    Last Action State:        succeeded
    Last Action Description:  Instance provisioning completed
    Kubernetes Master Host:   demo-cluster-01.vmwdxb.com
    Kubernetes Master Port:   8443
    Worker Nodes:             2
    Kubernetes Master IP(s):  172.17.1.17

In the above example, a DNS entry should be created to map demo-cluster-01.vmwdxb.com to 172.17.1.17 before accessing the cluster with the “pks get-credentials” command.
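A quick way to confirm the DNS entry is in place before running “pks get-credentials” is to resolve the master host name and compare it with the master IP reported by the CLI. The sketch below uses Python's standard `socket` module. The helper name `dns_matches` and the stand-in resolver are my own, added so the example also runs without network access.

```python
import socket

def dns_matches(hostname: str, expected_ip: str, resolve=socket.gethostbyname) -> bool:
    """Return True when hostname resolves to expected_ip."""
    try:
        return resolve(hostname) == expected_ip
    except OSError:  # socket.gaierror is a subclass of OSError
        return False

# Stand-in resolver for an offline demonstration (hypothetical records).
FAKE_RECORDS = {"demo-cluster-01.vmwdxb.com": "172.17.1.17"}

def fake_resolve(name: str) -> str:
    if name not in FAKE_RECORDS:
        raise socket.gaierror("unknown host")
    return FAKE_RECORDS[name]

ok = dns_matches("demo-cluster-01.vmwdxb.com", "172.17.1.17", resolve=fake_resolve)
print(ok)
```

Dropping the `resolve=` argument makes the same call go through the system resolver.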


Kubernetes Operations with NSX-T

This section is not restricted to PKS. It applies to any Kubernetes distribution.

Before I start with mapping Kubernetes Networking Objects to NSX-T Objects, here is an overview mapping of the Kubernetes Networking Objects to traditional Networking Objects that will help the networking admins have a better understanding. I’m probably oversimplifying it here, but it will help show what we are trying to achieve for the Networking Admin.

| Kubernetes Object | Traditional Networking Terms | Notes |
| --- | --- | --- |
| Name Space | Tenant | Native K8s feature |
| Kubernetes Ingress | North-South Layer 7 Load Balancer (HTTP-HTTPS) | Needs an external Ingress Controller |
| Service Type Load Balancer | North-South Layer 4 Load Balancer | Needs a cloud provider’s load balancer to work |
| Service Type ClusterIP | East-West Layer 4 Load Balancer | Native K8s feature using KubeProxy |
| Service Type NodePort | NAT Pod IP/Port to Node IP/Port | Native K8s feature using KubeProxy |
| Kubernetes Network Policy | Access Control List (ACL) | Needs a CNI plugin that supports Network Policy |

In this section I want to describe how NSX-T reacts to Kubernetes operations. One of the main design principles of the NSX-T CNI integration with Kubernetes is not to stand in the way of the developer, which it achieves by automating the creation of all needed networking and security constructs in NSX-T.

Let’s take a closer look at how NSX-T will react to different Kubernetes Operations related to Networking and Security.

Name Space Creation (NAT Mode)

Let’s create our first Kubernetes Name Space and see how NSX-T will react to that.

    $ kubectl create ns yelb

    namespace/yelb created

Now let’s take a look at NSX-T and do a simple search for any items with the name yelb

T1-Router is created for that Name Space:


Logical Switch Created for that Name Space:


The above Logical switch will be attached to the respective Logical Router. A subnet will be derived from the Pods-IP-Block with the first IP address assigned to the Router Port to act as a Default Gateway for the Pods in that Name Space.
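To illustrate the "subnet derived from the Pods-IP-Block, first IP as gateway" idea, here is a small sketch using Python's `ipaddress` module. The block 172.16.0.0/16 and the sequential index are assumptions for illustration. The actual NSX-T IPAM allocator may hand out subnets in a different order.

```python
import ipaddress
from itertools import islice

def carve_subnet(block_cidr: str, prefix: int, index: int):
    """Return the index-th subnet of the given prefix length and its first host IP."""
    block = ipaddress.ip_network(block_cidr)
    # islice avoids building the full list of subnets for a large block.
    subnet = next(islice(block.subnets(new_prefix=prefix), index, None))
    gateway = next(subnet.hosts())  # first usable address acts as the default gateway
    return subnet, gateway

# Hypothetical Pods-IP-Block with a /26 per Name Space (pod_subnet_prefix = 26).
subnet, gateway = carve_subnet("172.16.0.0/16", 26, 1)
print(subnet, gateway)
```

With a /26 per Name Space, a /16 block yields 1024 such subnets, each with 62 usable addresses.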

A SNAT entry will be configured in the Tier0 Logical Router for each Name Space using the IP-Pool-VIPs since it is the pool with external connectivity.

Name Space Creation (No-NAT Mode)

NSX-T has the ability to create a Name Space with direct routing to the Pods IP Addresses.

To do that, we need to add an annotation to the Name Space YAML file as shown below:

    admin@k8s-master:~$ vim no-nat-namespace.yaml

    apiVersion: v1
    kind: Namespace
    metadata:
      name: no-nat-namespace
      annotations:
        ncp/no_snat: "true"

    admin@k8s-master:~$ kubectl apply -f no-nat-namespace.yaml
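If Name Spaces are created from automation rather than by hand, the same manifest can be generated programmatically. This is a minimal sketch, assuming the output is written to a file and applied with kubectl, which accepts JSON manifests as well as YAML. The function name is hypothetical. Note that annotation values must be strings, which is why "true" is quoted.

```python
import json

def no_snat_namespace(name: str) -> dict:
    """Build a Namespace manifest carrying the ncp/no_snat annotation."""
    return {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {
            "name": name,
            # Annotation values are strings, so the flag is "true", not a boolean.
            "annotations": {"ncp/no_snat": "true"},
        },
    }

manifest = no_snat_namespace("no-nat-namespace")
print(json.dumps(manifest, indent=2))
```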

Note: even though NSX-T NCP has always supported Routable Pod Networks, this feature is only supported in PKS 1.3 and later. To configure a Routable Pod Network in PKS, a Network Profile needs to be configured as shown in the example below:

np_customer_B.json

    {
      "name": "np-cust-b",
      "description": "Network Profile for Customer B",
      "parameters": {
        "lb_size": "medium",
        "t0_router_id": "5a7a82b2-37e2-4d73-9cb1-97a8329e1a92",
        "fip_pool_ids": [
          "e50e8f6e-1a7a-45dc-ad49-3a607baa7fa2"
        ],
        "pod_routable": true,
        "pod_ip_block_ids": [
          "ebe78a74-a5d5-4dde-ba76-9cf4067eee55",
          "ebe78a74-a5d5-4dde-ba76-9cf4067eee56"
        ],
        "master_vms_nsgroup_id": "9b8d535a-d3b6-4735-9fd0-56305c4a5292",
        "pod_subnet_prefix": 26
      }
    }

In Kubernetes environments other than Enterprise PKS, the routable IP block is specified in ncp.ini in the no_snat_ip_blocks = field.

NSX-T NCP Configuration could be found here.

Service Type Load Balancer

Kubernetes Service Type LoadBalancer will provision an external Layer-4 Load Balancer depending on the Cloud Provider. For example, using this feature in AWS will provision an ELB.

In NSX-T, the Load Balancer is already provisioned during cluster creation. Deploying a Service of type LoadBalancer will configure the VIP and the server pool according to the specs in the YAML file. Let’s take a look at the example below.

    apiVersion: v1
    kind: Service
    metadata:
      name: yelb-ui
      labels:
        app: yelb-ui
        tier: frontend
      namespace: yelb
    spec:
      type: LoadBalancer
      ports:
      - port: 80          #<< VIP Port Number
        protocol: TCP
        targetPort: 80    #<< Pool Port Number
      selector:
        app: yelb-ui      #<< Dynamic Membership for the Pool based on Labels
        tier: frontend

    $ kubectl apply -f yelb-ui.yaml

    $ kubectl get svc -n yelb

    NAME     TYPE          CLUSTER-IP      EXTERNAL-IP               PORT(S)       AGE
    yelb-ui  LoadBalancer  10.100.200.205  100.64.128.33,172.17.1.6  80:32059/TCP  6d

The IP address 172.17.1.6 in the above example is the external VIP, which was assigned from the IP-Pool-VIPs by NSX-T IPAM. We can access our application using this IP address.

If you are wondering what the other IP address is (100.64.128.33), it’s the internal IP of the Load Balancer Service. This IP can be matched in a Kubernetes Network Policy.
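When scripting against this output, the two addresses in the EXTERNAL-IP column can be told apart by checking which one belongs to the VIP pool. The sketch below assumes the IP-Pool-VIPs covers 172.17.1.0/24, which is a guess based on the addresses in this example. The function name is also hypothetical.

```python
import ipaddress

def split_external_ips(external_ip_field: str, vip_pool_cidr: str):
    """Split a comma-separated EXTERNAL-IP value into (pool VIPs, other addresses)."""
    pool = ipaddress.ip_network(vip_pool_cidr)
    vips, others = [], []
    for raw in external_ip_field.split(","):
        addr = ipaddress.ip_address(raw.strip())
        (vips if addr in pool else others).append(str(addr))
    return vips, others

# EXTERNAL-IP value from the kubectl output above; pool CIDR is an assumption.
vips, internal = split_external_ips("100.64.128.33,172.17.1.6", "172.17.1.0/24")
print(vips, internal)
```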

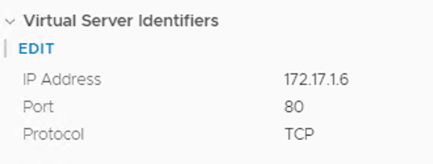

In NSX-T, a new VIP is created with the External IP address as shown in kubectl get svc:


VIP Port Number as per the YAML file


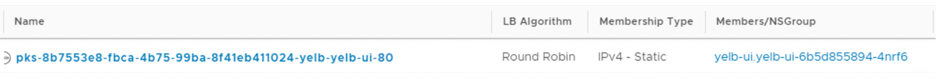

A server pool is created,


Pool membership is dynamic and based on the Kubernetes labels specified in the YAML file. Let’s scale our deployment and see whether NSX-T picks that up in the Load Balancer pool.

    $ kubectl scale --replicas=3 deploy/yelb-ui

Let’s take a look at our pool:


Perfect!

In this section we showed how to create an External L4 Load Balancer in Kubernetes and how the specs will be mapped in NSX-T.
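The label-based pool membership described in this section follows the usual Kubernetes selector rule: a pod is selected when it carries every key/value pair in the selector. Here is a toy sketch of that rule. The pod IPs and the second application's labels are made up, and this is not how NCP is implemented internally.

```python
def matches(selector: dict, labels: dict) -> bool:
    """A pod matches when every selector key/value pair is present in its labels."""
    return all(labels.get(key) == value for key, value in selector.items())

def pool_members(selector: dict, pods: list) -> list:
    """Return the IPs of the pods that would land in the load balancer pool."""
    return [pod["ip"] for pod in pods if matches(selector, pod["labels"])]

# Hypothetical pods in the yelb Name Space.
pods = [
    {"ip": "172.16.1.2", "labels": {"app": "yelb-ui", "tier": "frontend"}},
    {"ip": "172.16.1.3", "labels": {"app": "yelb-ui", "tier": "frontend"}},
    {"ip": "172.16.1.4", "labels": {"app": "yelb-db", "tier": "backenddb"}},
]
selector = {"app": "yelb-ui", "tier": "frontend"}
members = pool_members(selector, pods)
print(members)
```

Scaling the deployment simply adds more pods with the same labels, so they show up as new pool members without any change to the Service.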

Kubernetes Ingress

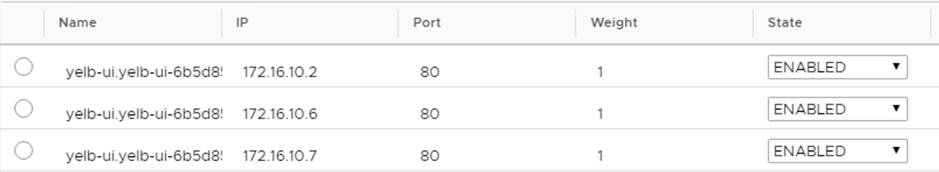

In most Kubernetes deployments, Kubernetes Ingress needs an Ingress Controller such as the Nginx Ingress Controller, HAProxy, or the OpenShift Router. With NSX-T, Kubernetes Ingress is handled by the same Load Balancer used for Service Type LoadBalancer.

During the Kubernetes cluster creation, NSX-T created a load balancer with two VIPs: one for HTTP, and another one for HTTPS.


The external IP addresses were already assigned. Let’s see what happens when we create a Kubernetes Ingress:

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: test-ingress
    #if HTTPS termination is required, a Kubernetes Secret should be added
    spec:
      rules:
      #the host name or URL to access the application
      - host: rest-review.vmwdxb.com
        #only HTTP is supported in the server pool side with NSX-T as of today.
        http:
          #we can use different paths to access different Kubernetes services
          paths:
          - path:
            #a service to group the pods that we need to access
            backend:
              serviceName: yelb-ui
              servicePort: 80

Note: for HTTPS termination, a Kubernetes Secret should be added under (spec:) as in the example below.

    spec:
      tls:
      - hosts:
        - rest-review.vmwdxb.com
        secretName: testsecret-tls

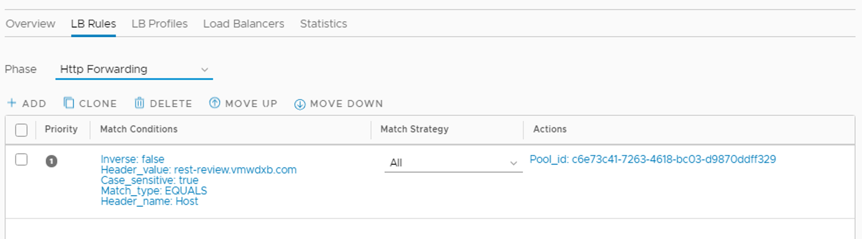

If we take a look at NSX-T, we should find a new HTTP forwarding rule based on the path specified in the YAML in the HTTP/HTTPS VIP that was created during the cluster creation. In the case of HTTPS, NSX-T will use the Kubernetes Secret for the HTTPS termination.


Kubernetes Network Policy

Kubernetes Network Policy requires a CNI plugin to enforce the policy. In this section I will explore how NSX-T reacts when a Kubernetes Network Policy is configured, but before that I need to clarify something very important about network security operations in Kubernetes environments with NSX-T.

There are two ways to restrict the access to Kubernetes pods with NSX-T

- Kubernetes Network Policy: the definition of Kubernetes Network Policy is done by the DevOps admin which is sometimes called SRE or PRE. NSX-T will automatically create the Security Group and enforce the policy based on the Kubernetes Network Policy YAML definition.

- Predefined Label Based Rules: typically the Security Group and Policy will be created by the Security Admin from NSX-T with a membership criteria that is based on Kubernetes Objects and labels.

Which one should you use?

The answer to that question depends on the Operations model in the organization. My recommendation is to use a mix of both. The good thing in NSX-T is that all rules will be visible in a centralized place in NSX-T manager Firewall Dashboard regardless of what methodology is used to create them.

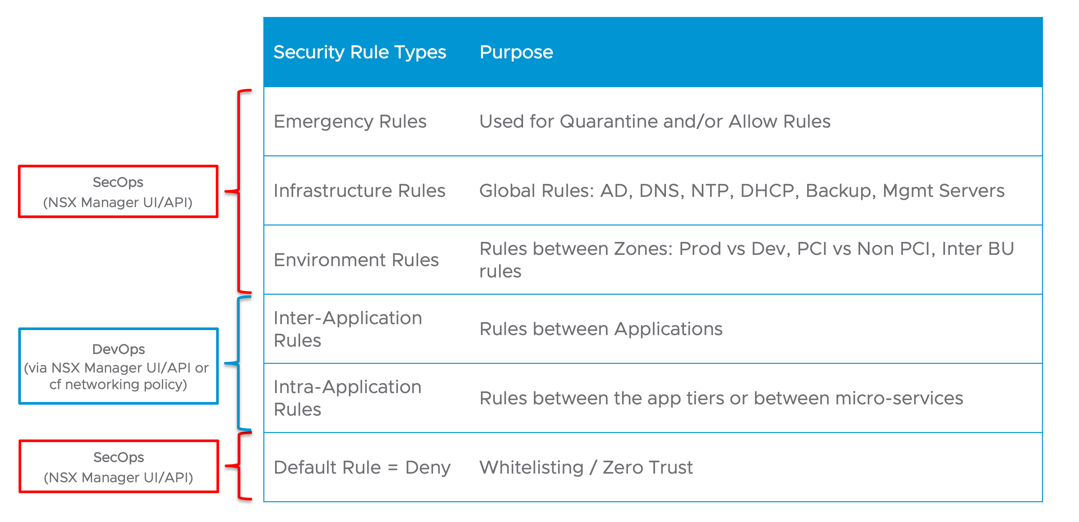

The NSX-T Distributed Firewall supports the concept of Firewall Sections. Higher sections have higher priority than lower ones and are applied first. Predefined security rules can be created by the security admin in the highest and lowest sections, covering infrastructure services such as DNS, LDAP, and NTP, and rules between different Kubernetes clusters and Name Spaces. The lowest section will include the default deny rule.

The Middle Section could be handled by the DevOps team within a cluster or a Name Space to give them the ability to test their applications without the need to go back to the SecOps.
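The section ordering described above amounts to first-match evaluation from the top section down, with the default deny as the last resort. The sketch below is a deliberately simplified model that matches on destination port only. The section and rule names are invented for illustration, and real DFW rules also match on sources, destinations, and applied-to scopes.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Rule:
    name: str
    port: Optional[int]  # None matches any port
    action: str          # "allow" or "drop"

def evaluate(sections, port: int):
    """Walk sections top to bottom and return the first matching rule."""
    for section_name, rules in sections:
        for rule in rules:
            if rule.port is None or rule.port == port:
                return section_name, rule.name, rule.action
    return None

# Hypothetical layout: SecOps owns top and bottom, DevOps owns the middle.
sections = [
    ("top (SecOps)", [Rule("allow-dns", 53, "allow")]),
    ("middle (DevOps)", [Rule("allow-appserver", 4567, "allow")]),
    ("bottom (SecOps)", [Rule("default-deny", None, "drop")]),
]
print(evaluate(sections, 4567))
print(evaluate(sections, 22))
```

Traffic that no higher rule claims falls through to the default deny in the bottom section, which is what keeps the DevOps-managed middle section safe to experiment in.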


So let’s go back to Kubernetes Network Policy. Here is a simple example of Kubernetes Network Policy:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: test-network-policy
      namespace: default
    spec:
      podSelector:
        matchLabels:
          app: yelb-appserver   #<< Selector for Destination Pods
      policyTypes:
      - Ingress                 #<< it could be Ingress, Egress or both
      ingress:
      - from:
        - podSelector:
            matchLabels:
              app: yelb-ui      #<< Selector for Source
        ports:
        - protocol: TCP         #<< Port Number to Open
          port: 4567

Here’s more info about Kubernetes Network Policy.

Now let’s have a look at how NSX reacts to the above Kubernetes Network Policy by checking the NSX-T Distributed Firewall.

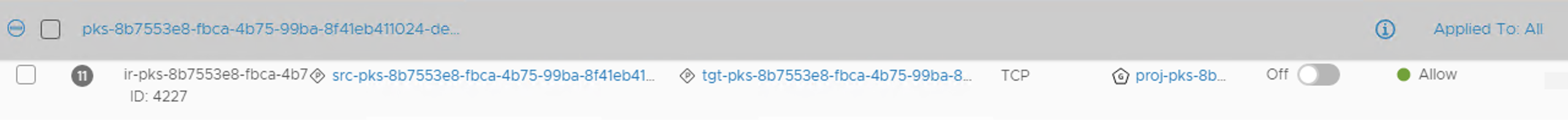

As you can see below, the Source, Destination and port numbers are automatically created in NSX-T:


You can see that groups were created automatically for the Network Policy: one for the source, whose name starts with src-, and another for the target, whose name starts with tgt-, with TCP 4567 allowed between them.
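To make the effect of test-network-policy easier to reason about, here is a toy check that mirrors its three conditions: the destination pod label, the source pod label, and the port. It is a simplified sketch of the policy's intent for this one example, not a general Network Policy evaluator and not what NSX-T runs.

```python
def policy_allows(src_labels: dict, dst_labels: dict, protocol: str, port: int):
    """Return None if the policy does not select the destination, else True/False."""
    if dst_labels.get("app") != "yelb-appserver":
        return None  # pod is not selected by this policy's podSelector
    return (
        src_labels.get("app") == "yelb-ui"
        and protocol == "TCP"
        and port == 4567
    )

ui_to_app = policy_allows({"app": "yelb-ui"}, {"app": "yelb-appserver"}, "TCP", 4567)
db_to_app = policy_allows({"app": "yelb-db"}, {"app": "yelb-appserver"}, "TCP", 4567)
print(ui_to_app, db_to_app)
```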

Assigning a Static IP Address to a Kubernetes Service

With NSX-T, we can assign a set of Kubernetes Pods a specific IP Address or a group of SNAT IP Addresses to source their traffic from.

This IP or group of IPs will override the Name Space SNAT IP address. The benefit of that is a predictable IP for a Pod or group of Pods to make it easily identifiable in the physical infrastructure. That will help in case we want to use existing physical firewalls or any other physical devices.

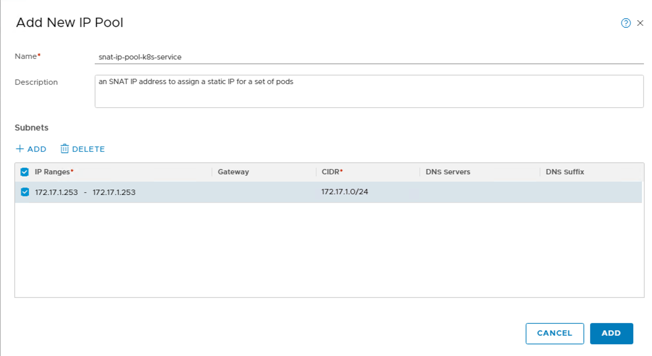

The first thing we need to do is define a new IP Pool in NSX-T. That pool can have a single IP address or a group of IP addresses. In this example I am using a single IP.


The only thing we need to do is add the annotation "ncp/snat_pool: SNAT-IP-POOL-NAME" to any Kubernetes Service, as below:

    apiVersion: v1
    kind: Service
    metadata:
      name: svc-example
      annotations:
        ncp/snat_pool: snat-ip-pool-k8s-service
    spec:
      selector:
        app: example

Don’t forget to apply the YAML file.

Troubleshooting

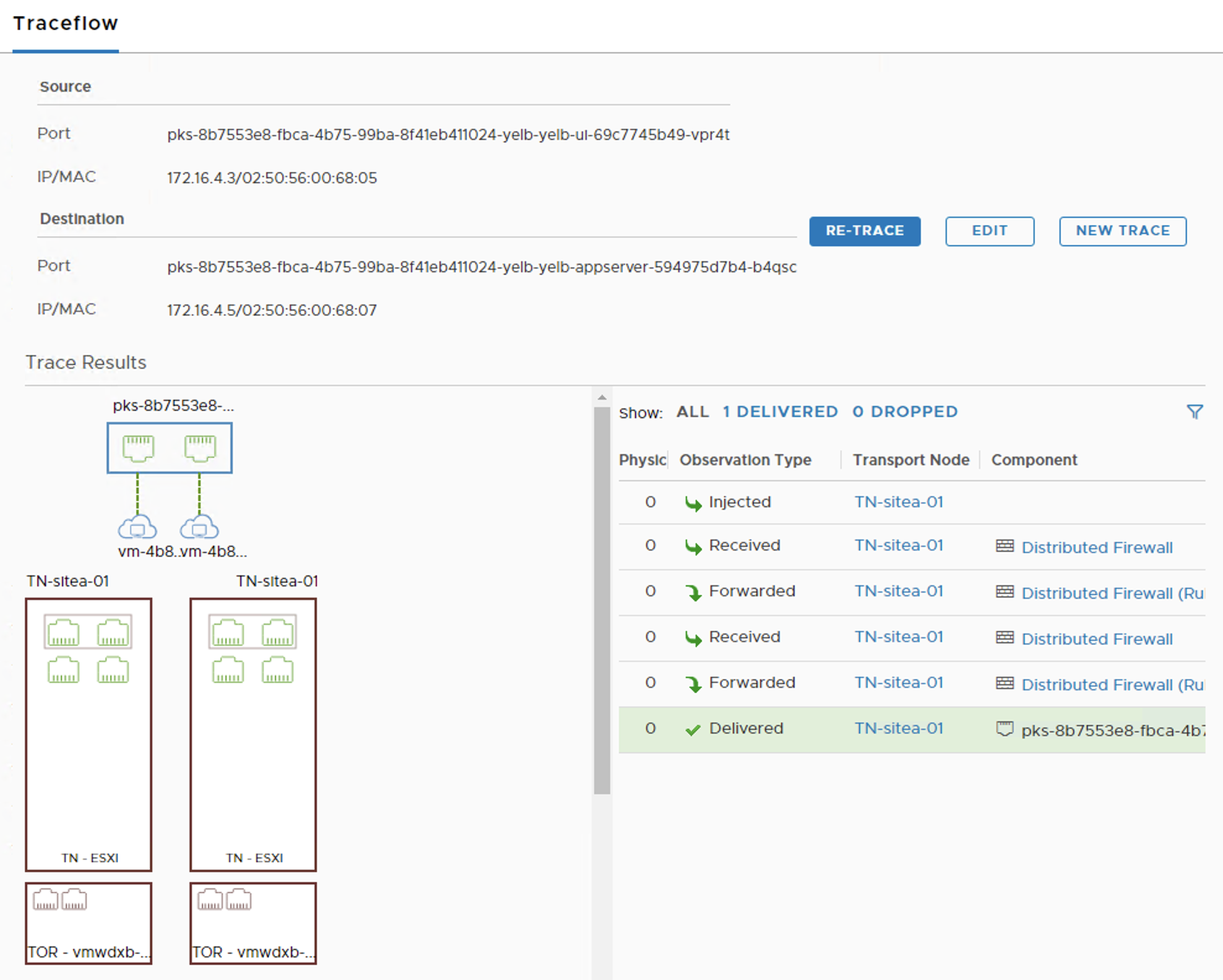

The easiest way to troubleshoot connectivity between Pods or between Pods & VMs is to use NSX-T Traceflow. Traceflow could emulate any kind of traffic and it will show what is blocking it in case a Firewall Rule or a Kubernetes Network Policy is blocking that traffic.


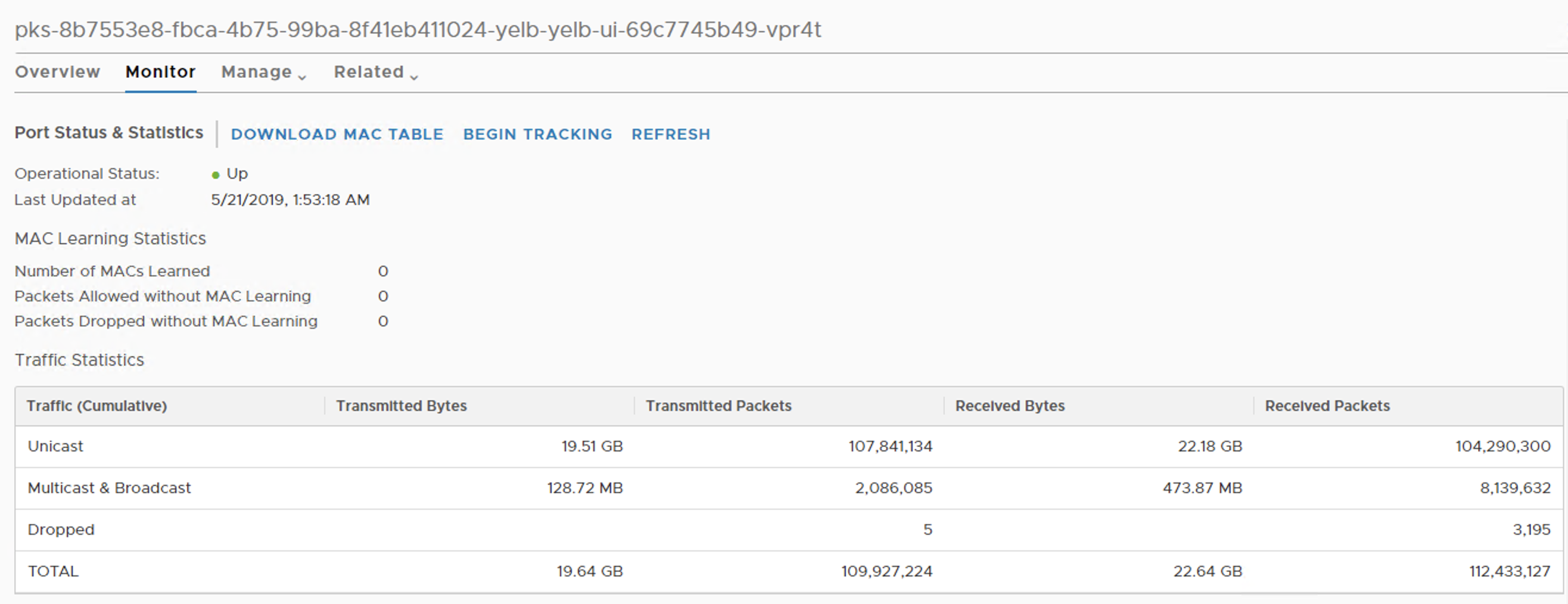

With NSX-T we could see Pods IP addresses, LB-Pools Health, Port counters etc. all from NSX-T GUI in a way that is friendly to the Operations Team.

Below is a capture of the Logical Port view that a Kubernetes Pod is connected to where some stats could be seen related to sent and received traffic to that Pod.


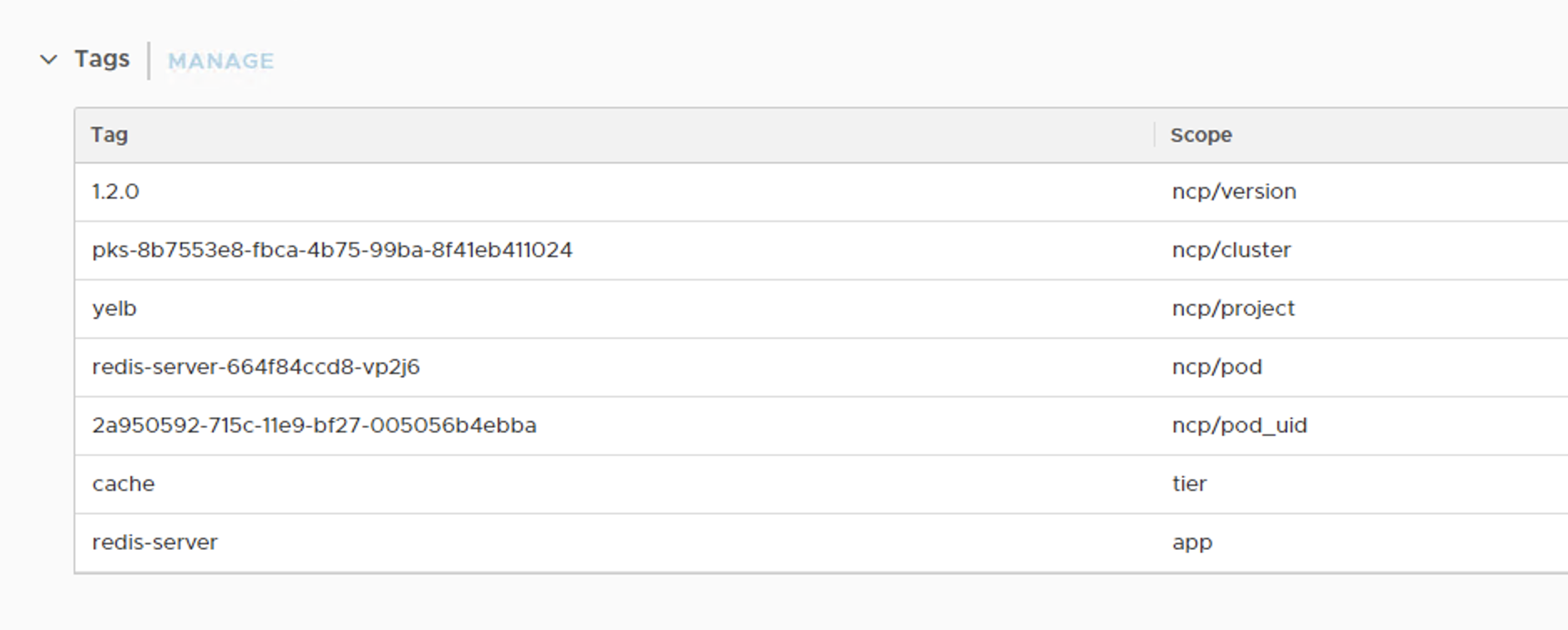

Another important point to notice is “the Tags”. By checking the Logical Port Tags, we could find a lot of useful info such as the Pod Name, Name Space (Project), and all other Kubernetes Tags as shown below for a Redis Pod,


For more visibility, vRealize Network Insight (vRNI) could be used. Starting with vRNI 4.1, we can use it for monitoring and visibility in Kubernetes and Enterprise PKS environments. More info could be found in this blog.

Summary

In this blog I focused on how to operate NSX-T in Kubernetes environments.

- I started with an NSX-T Deployment and Integration with Kubernetes and Enterprise PKS overview

- How to use Enterprise PKS Network Profiles

- How Kubernetes Objects are mapped to NSX-T Objects.

As you can see in this blog, NSX-T does not stand in the way of the developer, and it provides the operations team with the security and visibility they need to operate the environment.


The post Kubernetes and VMware Enterprise PKS Networking & Security Operations with NSX-T Data Center appeared first on Network Virtualization.