This is the year of the service mesh. Service mesh solutions like Istio are popping up everywhere faster than you can say Kubernetes. Yet, with the exponential growth in interest also comes confusion. These are a few of the questions I hear out there:

- Where is the overlap between NSX Service Mesh and NSX Data Center?

- Is there synergy between the NSX Data Center and Istio?

- Can service mesh be considered networking at all?

These are all excellent and valid questions. I will try to answer them at the end of the post, but to get there let’s first understand what each solution is trying to achieve and place both on the OSI model to bring more clarity to this topic.

Note: I focused this post on NSX Data Center and Istio to prevent confusion. Istio is an open source service mesh project. NSX Service Mesh is a VMware service delivering enterprise-grade service mesh. While it is built on top of Istio, it brings extensive capabilities beyond those offered by the Istio open source project.

Before we start, in a nutshell, what is Istio?

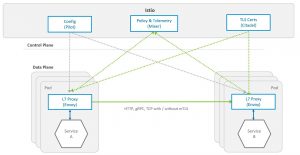

Istio is an open source service mesh project led by Google that addresses many of the challenges that come with the rise of distributed micro-services architectures. A lot of attention is paid to networking, security and observability capabilities. We will not explain everything about service mesh and Istio, as that would require a book, but to really summarize it:

It is an abstraction layer that moves service-to-service communication (service discovery, encryption), observability (monitoring and tracing) and resiliency (circuit breakers and retries) away from the developer and onto a declarative configuration layer.

The data plane of Istio is based on Envoy, which is by itself a very successful open source reverse proxy project started by Lyft. Istio adds a control plane on top of Envoy.

![]()

Why should you care?

Istio solves a long-time problem of middleware management, and according to Gartner, “by 2020, all leading container management systems (delivered either as software or as a service) will include service mesh technology, up from less than 10% of generally available offerings today.”

Source: Gartner, Innovation Insight for Service Mesh, 3 December 2018

We at VMware see it as going beyond containers (there is no reason it cannot be extended to VMs, but that is a different subject).

NSX Data Center

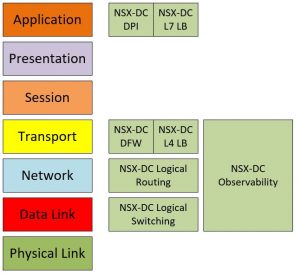

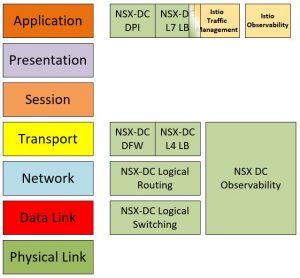

NSX Data Center delivers virtualized networking and security entirely in software, focusing its networking capabilities on layers 2-4, with some security and load balancing capabilities at layer 7. Here are the main components of NSX Data Center in their respective places in the OSI model:

![]()

Logical Switching and Routing

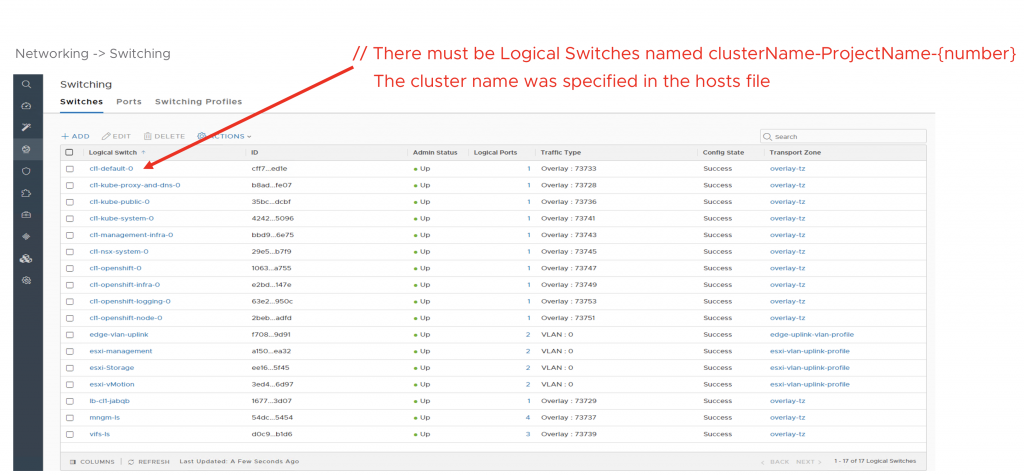

Switching is a layer 2 component and routing is a layer 3 component. That is basic networking instantiated in software.

Firewall

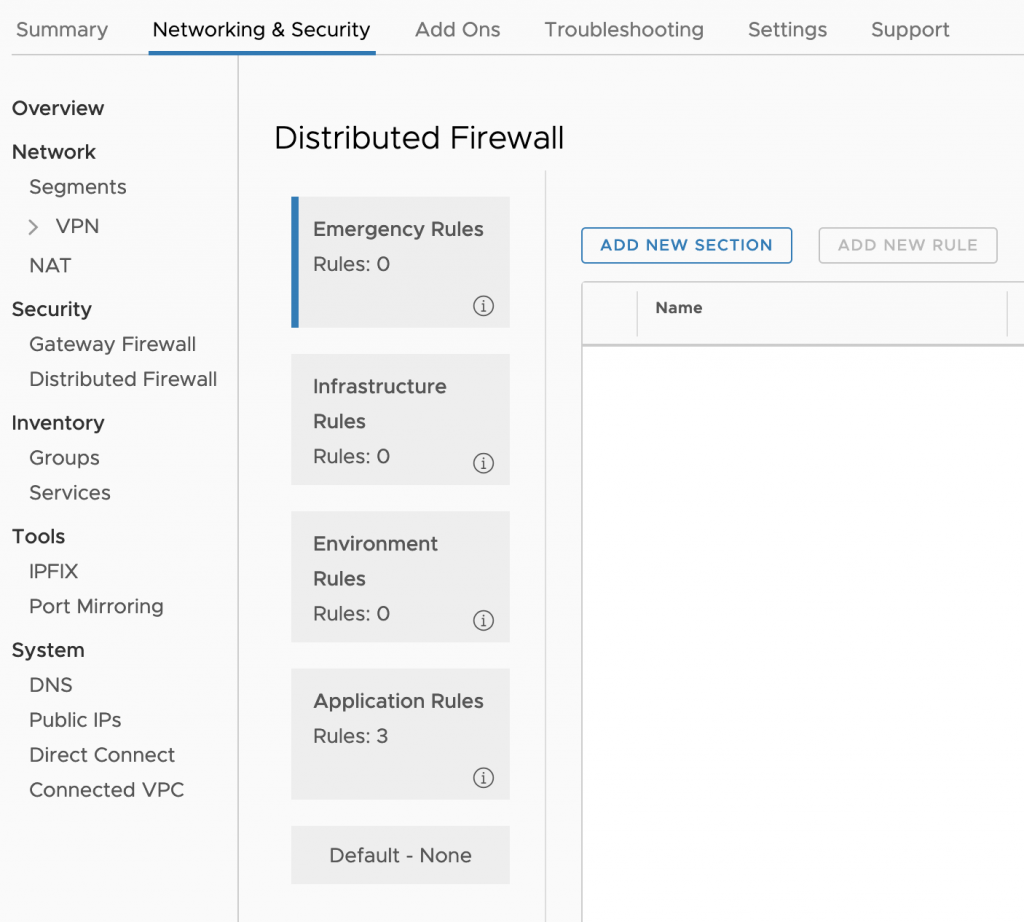

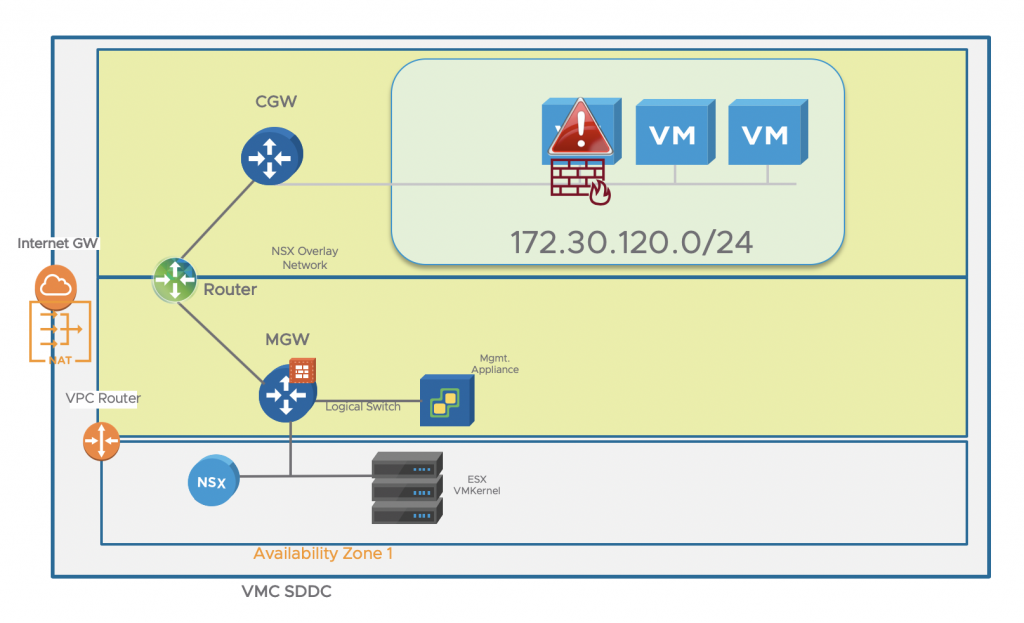

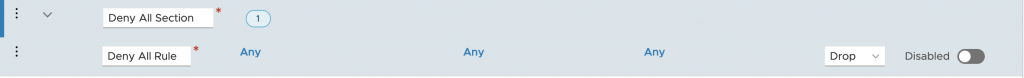

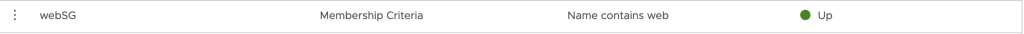

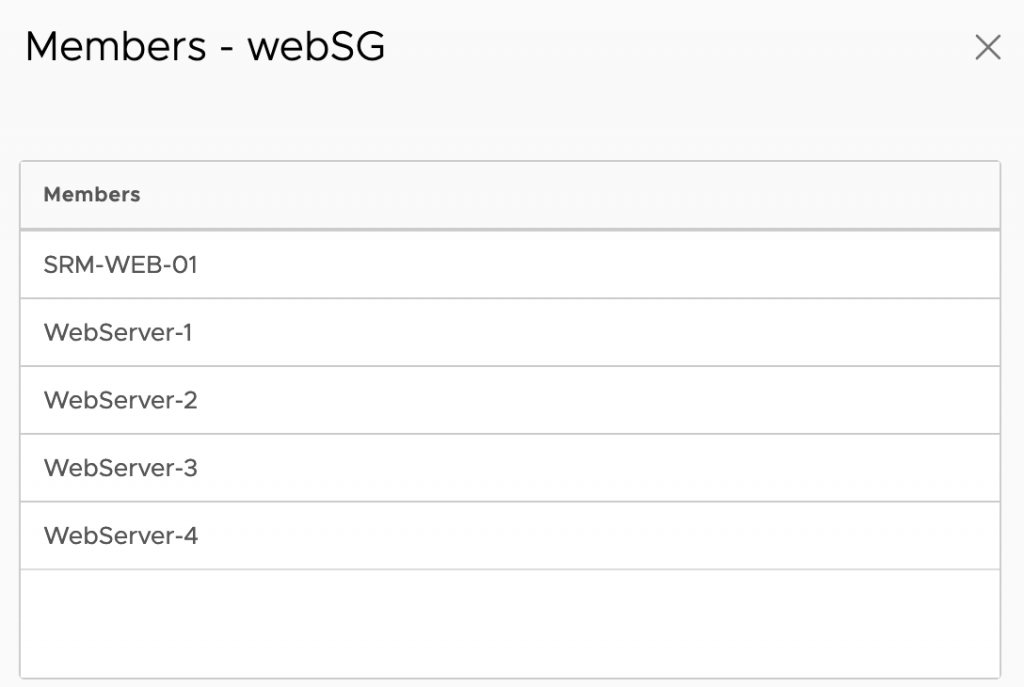

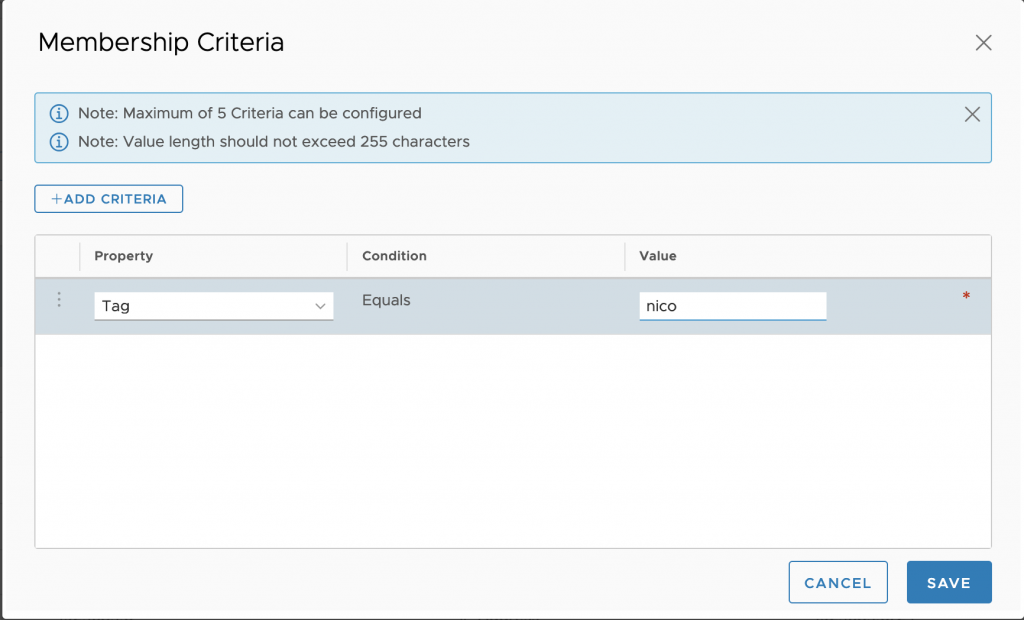

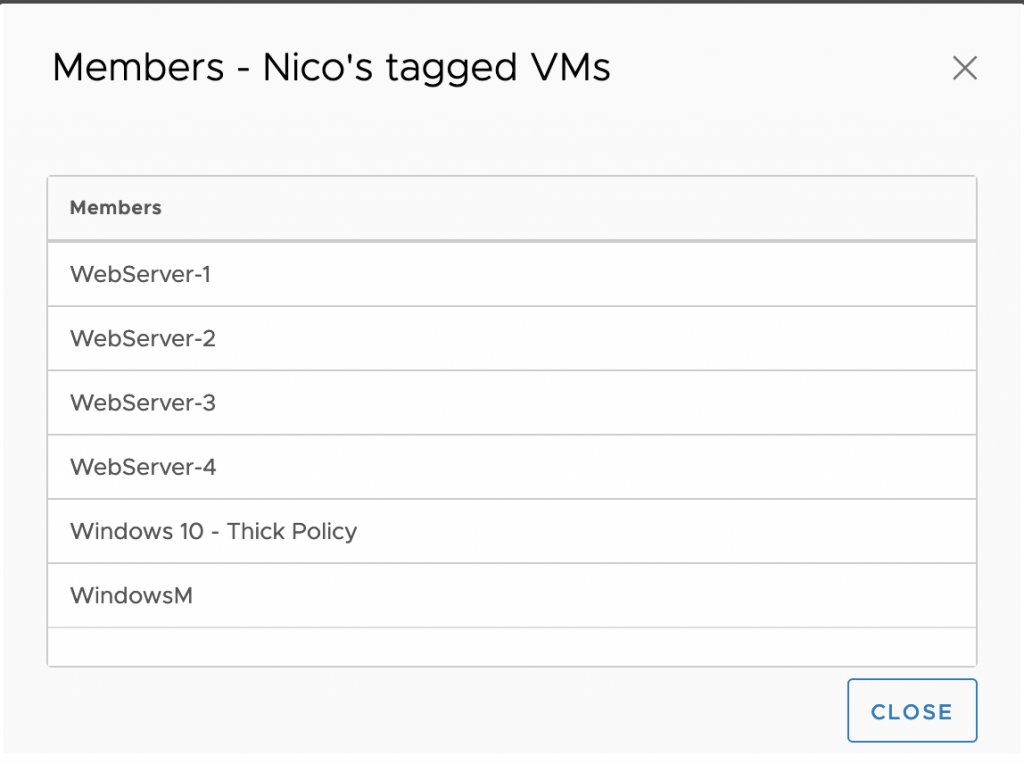

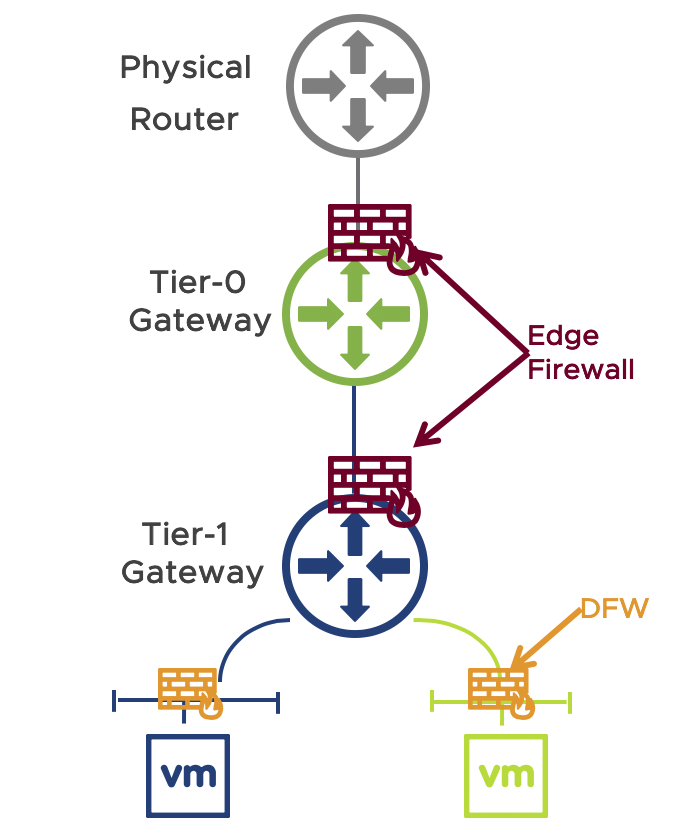

The NSX Data Center distributed firewall (DFW) is the basis for achieving micro-segmentation at L4: the ability to inspect each packet flowing between application endpoints against a security policy, irrespective of network topology. Ports that are not explicitly allowed cannot be accessed. When implemented on vSphere or KVM, the policy is enforced within the hypervisor kernel.

NSX Data Center focuses on L3-L7 firewalling, with added capabilities for L7 service insertion with next-generation firewall partners such as Check Point, while enforcement is at L4 (ports).
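To make the default-deny idea behind L4 micro-segmentation concrete, here is a minimal Python sketch. It is not the NSX Data Center API: the `FlowRule` type, the `is_allowed` helper and the `web`/`app`/`db` group labels are hypothetical names chosen for illustration. The only point it shows is that a flow passes only if an explicit rule allows it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FlowRule:
    """One explicitly allowed L4 flow (all names here are hypothetical)."""
    src_group: str   # label of the source workload group
    dst_group: str   # label of the destination workload group
    protocol: str    # "tcp" or "udp"
    port: int        # destination port


# Hypothetical allow list: anything not listed here is dropped.
ALLOWED_FLOWS = {
    FlowRule("web", "app", "tcp", 8443),
    FlowRule("app", "db", "tcp", 5432),
}


def is_allowed(src_group, dst_group, protocol, port, rules=ALLOWED_FLOWS):
    """Return True only if the exact flow is explicitly allowed (default deny)."""
    return FlowRule(src_group, dst_group, protocol, port) in rules
```

With this allow list, `is_allowed("web", "app", "tcp", 8443)` is true, while a direct `web` to `db` connection on port 5432 is rejected because no rule mentions it.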

L7 Based Application Signatures (DPI)

With NSX-T Data Center 2.4, NSX Data Center added DPI (deep packet inspection) capabilities, that is, the ability to define L7-based application signatures in distributed firewall rules. Users can either combine L3/L4 rules with L7 application signatures or create rules based on L7 application signatures only. We currently support application signatures with various sub-attributes for server-server or client-server communications only.

Read this blog post for more information about NSX Data Center and DPI.

Load Balancing

NSX Data Center provides load balancing on layer 4 (TCP load balancing) and layer 7 (HTTP, HTTPS) with many enterprise-level load balancing capabilities. This is traditional edge load balancing implemented in software.

NSX Data Center Observability

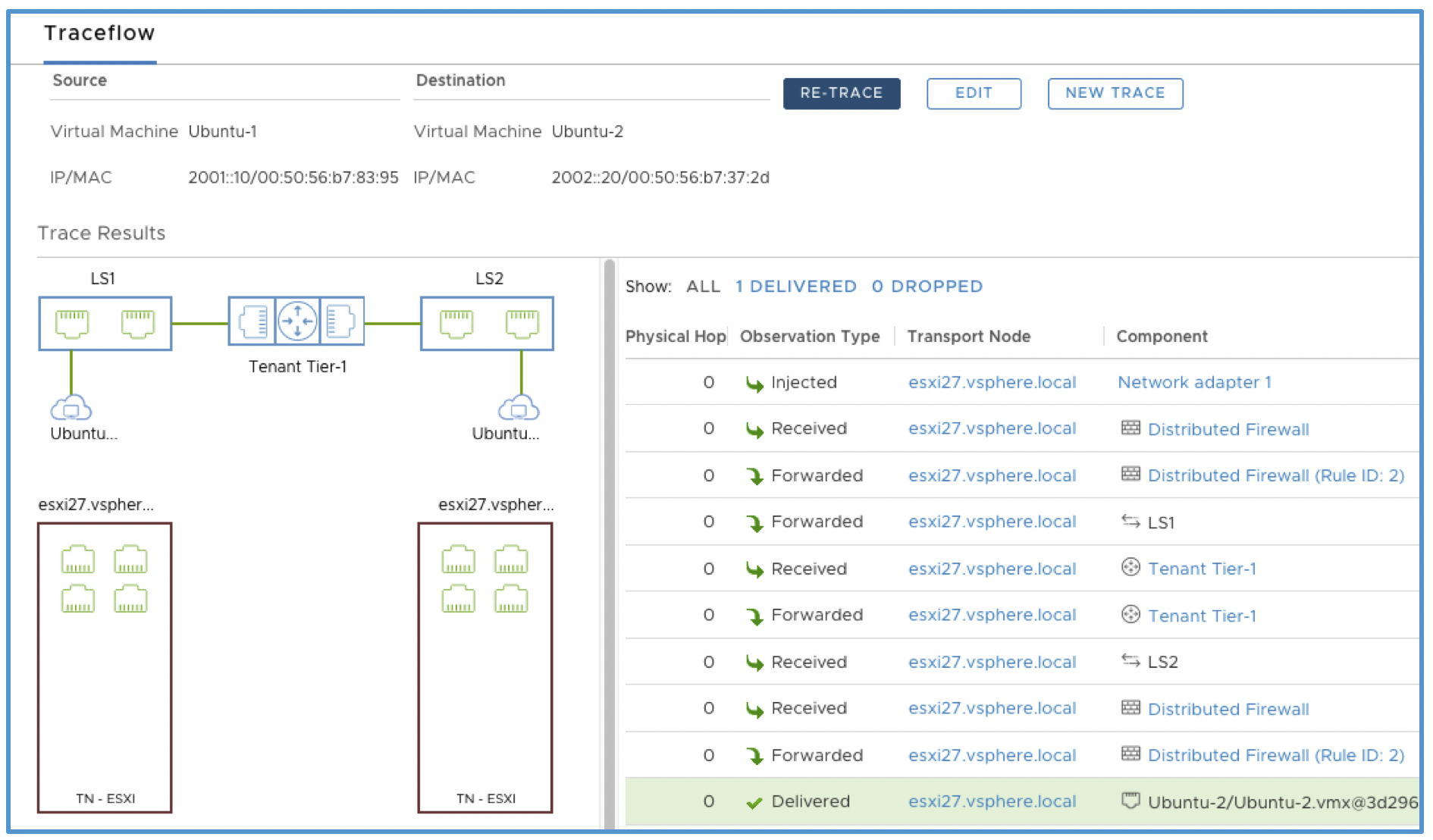

NSX Data Center observability capabilities are significant to our enterprise customer base and focus on L2-L4 networking and security. Among other things, we can provide visibility into the traffic that flows through the NSX Data Center firewall (FW) and relate physical FW traffic to the NSX Data Center logical networks and the platforms running with NSX Data Center using SNAT tools. Read this blog for more on NSX Data Center firewall.

As you can see, most capabilities concentrate on L2-L4 networking instantiated in software with extensive enterprise grade monitoring and operational tools.

Let’s look at service mesh now. I am concentrating on Istio, mainly because it is the front runner service mesh and because it is the first service mesh to be supported by NSX Service Mesh.

Traffic Management

Istio’s traffic management capabilities are based on the Envoy L7 proxy, which is a distributed load balancer attached to each micro-service (in the case of Kubernetes, as a sidecar). The placement of that load balancer close to the workload, and the fact that all traffic flows through it, allows it to be programmed with very interesting rules. Before placing it on the OSI model I will quickly cover some of the main traffic management capabilities.

Also, there is an ingress and egress proxy for edge load balancing in Istio that I will touch upon as well.

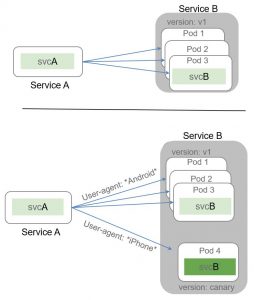

Traffic Splitting (sometimes called traffic shifting)

Provides the ability to send a percentage of traffic from one version of a service to another version of that service. Sometimes referred to as “canary upgrade” or “rolling upgrade”. As you can see in the diagram below, 1% of traffic hitting the front end service is directed to a new version of service B. If it looks good (no errors, etc.), more traffic will be shifted to “service B 2.0” until 100% of traffic has moved. The fact that it is L7 routing means that the decision where to send traffic is application based (service to service communications) and is unrelated to L3 routing, which is IP based. This will be a common theme, as you will see.

![]()
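The weighted choice behind traffic splitting can be sketched in a few lines of Python. This is only an illustration of the idea, not how Envoy or Istio implement it: the `pick_version` function and the `service-b-1.0` / `service-b-2.0` labels are hypothetical names.

```python
import random


def pick_version(weights, rng=random):
    """Pick a service version at random, proportionally to its weight.

    `weights` maps a version label to a non-negative weight, for example
    {"service-b-1.0": 99, "service-b-2.0": 1} for a 1% canary.
    """
    versions = list(weights)
    return rng.choices(versions, weights=[weights[v] for v in versions], k=1)[0]


# Hypothetical canary rollout: shift more traffic as confidence grows.
rollout_steps = [
    {"service-b-1.0": 99, "service-b-2.0": 1},
    {"service-b-1.0": 50, "service-b-2.0": 50},
    {"service-b-1.0": 0, "service-b-2.0": 100},
]
```

Each request calls `pick_version` with the current step's weights. Moving to the next step changes only the configuration, which is what makes the rollout declarative rather than coded into the application.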

Traffic Steering

Allows us to control where incoming traffic will be sent based on application attributes (some web attribute) such as authentication (send Jason to service A and Linda to service B), location (UK to service A and US to service B), device (watch to service A and mobile to service B) or anything else the application passes. This was achieved in the past in different ways, from application code to libraries, but with Istio we can configure that declaratively using a YAML file. Cool stuff!

![]()
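As a rough illustration of attribute-based steering, the Python sketch below evaluates an ordered list of match rules against request attributes and falls back to a default destination. The `steer` function, the attribute names (`user`, `country`, `device`) and the service labels are all hypothetical; in Istio the equivalent rules live in declarative configuration rather than in code.

```python
def steer(attributes, rules, default):
    """Return the destination of the first rule whose conditions all match.

    `rules` is an ordered list of (match, destination) pairs, where `match`
    is a dict of attribute name to required value.
    """
    for match, destination in rules:
        if all(attributes.get(key) == value for key, value in match.items()):
            return destination
    return default


# Hypothetical rules mirroring the examples in the text.
RULES = [
    ({"user": "jason"}, "service-a"),
    ({"user": "linda"}, "service-b"),
    ({"country": "UK"}, "service-a"),
    ({"device": "mobile"}, "service-b"),
]
```

For example, `steer({"user": "linda", "country": "UK"}, RULES, "service-a")` returns `"service-b"` because rules are evaluated in order and the user rule matches first.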

Ingress and Egress Traffic Control

The Istio gateway is the same Envoy proxy, only this time it’s sitting at the edge. It controls traffic coming and going from the Mesh and allows us to apply monitoring and routing rules from Istio Pilot. This is very much like the traditional load balancing we know:

![]()

Now, let’s place Istio traffic management on the OSI model:

![]()

As we can see in the diagram above, all the traffic management capabilities are on the L7 traffic management and load balancing level. We can see some overlap here between Istio and the NSX Data Center LB on edge load balancing in a purely functional sense, though we still need a load balancer in front of Envoy to send traffic to the Istio Gateway. The decision where to send traffic within the application is left to Istio. This is a common topology in container/micro-services platforms and is present in solutions like Pivotal Application Service (PAS), where a reverse proxy is placed in front of the application and behind a traditional load balancer.

NSX Data Center provides ingress L4 load balancing that is not achieved by Envoy and is required for service management on Kubernetes and traditional applications. NSX Data Center also provides the enterprise-level operational tools needed by enterprise customers. The NSX Data Center load balancer can send and manage traffic to the mesh through the ingress Envoy and to other entities that are not part of the mesh (not everything can be covered by service mesh, yet). So, there is partial overlap, or more accurately, some of the intelligence is moved up the stack. However, a traditional edge LB is still very much needed.

Istio Observability and Telemetry

Istio provides significant capabilities for telemetry, again, all focused on L7. Let’s look at some of those:

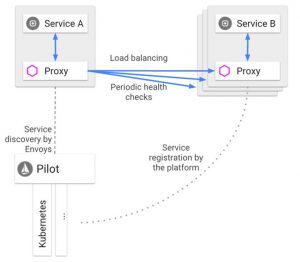

Service Discovery

While it is part of the load balancing act of Istio, I placed service discovery under observability as a category on its own. Istio’s Pilot component consumes information from the underlying platform service registry (e.g. Kubernetes) and provides a platform-independent service discovery interface. With this interface, tools like NSX Service Mesh can provide service observability (only NSX Service Mesh does that across Kubernetes clusters in multiple clouds and is not restricted to a site or a specific cluster).

![]()

Here is a sneak peek at NSX Service Mesh’s service discovery map:

![]()

Istio’s observability is focused on L7 and complements NSX Data Center, which focuses on L4. When troubleshooting a micro-services problem, especially cascading issues, one needs to visualize the service dependencies and flows. Problems can occur on different levels: it can be a service application issue, a compute issue or a network issue. Having the full picture is a powerful thing that reduces diagnostics and troubleshooting time significantly. No overlap here:

![]()

Istio Security

Istio provides security features that are focused on the identity of the services. NSX Service Mesh goes further and uniquely extends that to users and data. When we talk about identity, we focus on L7 (did I say that Istio is all about L7?). Here are a few key capabilities:

Mutual TLS Authentication

Istio’s service to service communication all flows through the Envoy proxies. The Envoys can create mTLS tunnels between them, where each service has its own certificate (identity) received from the Citadel component (the root CA of the mesh). The encryption all happens on L7, meaning what gets encrypted is the payload, not the traffic. See my earlier post about mTLS and the OSI layer.

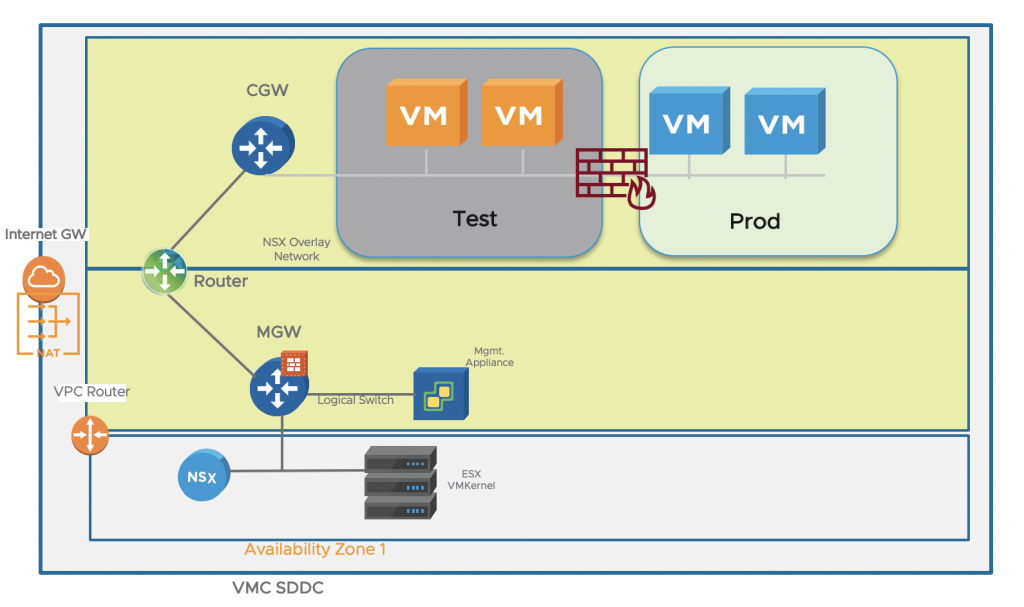

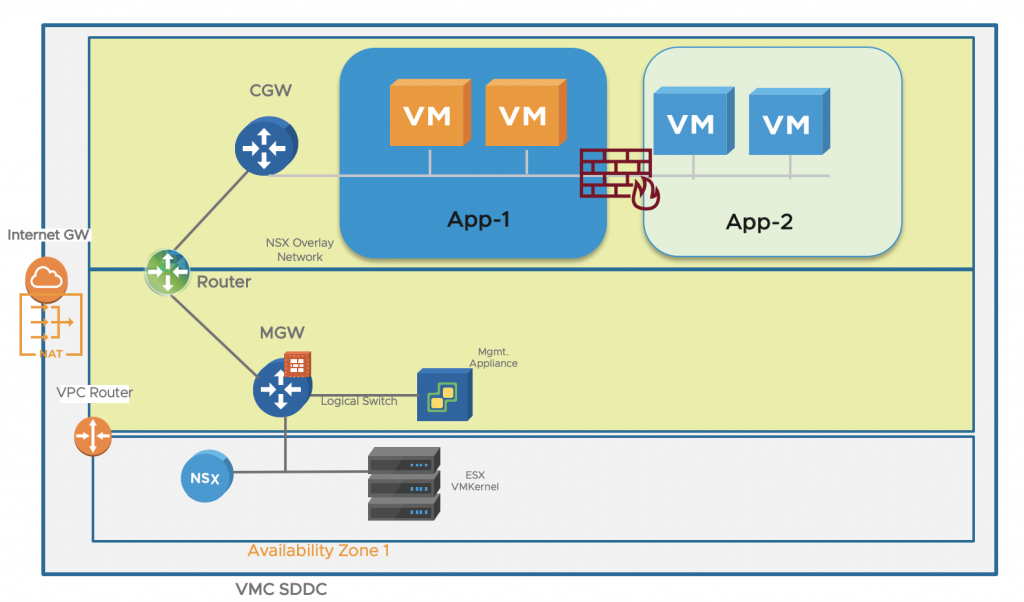

Istio Authorization

This is the micro-segmentation that Istio provides that I mentioned earlier. Istio authorization provides namespace-level, service-level, and method-level access control for services in an Istio mesh. It is called micro-segmentation because it uses the same notion that what is not defined as allowed to pass shall not pass, only this time the controls and enforcement are at L7 (service to service). The authorization service provides authorization for HTTP and provides micro-segmentation for services in an Istio mesh. It is not to be confused with NSX Data Center micro-segmentation, which operates on port-based access at L4.

Now, NSX Data Center DPI does provide L7 controls based on application signatures. But it does not cover service to service communication, and enforcement is done on L3-L4, hence there is no overlap with NSX Data Center micro-segmentation.

Istio can segment the services based on L7 constructs, such as:

RPC Level Authorization:

This method controls which service can access other services. Best described in the Istio docs:

Authorization is performed at the level of individual RPCs. Specifically, it controls “who can access my bookstore service”, or “who can access method getBook in my bookstore service”. It is not designed to control access to application-specific resource instances, like access to “storage bucket X” or access to “3rd book on 2nd shelf”. Today this kind of application-specific access control logic needs to be handled by the application itself.
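A minimal Python sketch of RPC-level authorization follows, assuming a simple allow list with default deny. It is not Istio's policy engine or its configuration format: the `Allow` type, the `authorize` function and the caller names (`frontend`, `inventory-admin`) are hypothetical, while `bookstore` and `getBook` are borrowed from the quote above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Allow:
    """Permit `source` to call `service`; `method=None` means any method."""
    source: str
    service: str
    method: Optional[str] = None


# Hypothetical policy: who can access the bookstore service, and how.
POLICIES = [
    Allow(source="frontend", service="bookstore", method="getBook"),
    Allow(source="inventory-admin", service="bookstore"),
]


def authorize(source, service, method, policies=POLICIES):
    """Default deny: a call passes only if some policy explicitly allows it."""
    return any(
        p.source == source
        and p.service == service
        and p.method in (None, method)
        for p in policies
    )
```

Note that the check stops at the service and method level. Deciding whether a caller may read the "3rd book on 2nd shelf" would still be up to the application, as the quote says.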

Role-based Access Control with Conditions

This method controls access based on user identity, plus a group of additional attributes. It’s the combination of RBAC (role-based access control) with the flexibility of ABAC (attribute-based access control).

![]()
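The combination of roles and attribute conditions can be sketched as follows. This is an illustrative Python model only, not Istio's RBAC resources: the role names, the `region` attribute and the `is_permitted` helper are hypothetical.

```python
# Hypothetical roles: each role grants a set of (service, method) permissions.
ROLES = {
    "book-reader": {("bookstore", "getBook")},
    "book-admin": {("bookstore", "getBook"), ("bookstore", "addBook")},
}

# Hypothetical bindings: a user gets a role only while all conditions hold.
BINDINGS = [
    {"user": "jason", "role": "book-reader", "conditions": {}},
    {"user": "linda", "role": "book-admin", "conditions": {"region": "UK"}},
]


def is_permitted(user, attributes, service, method, roles=ROLES, bindings=BINDINGS):
    """RBAC with conditions: the role must grant the call and the
    request attributes must satisfy every condition on the binding."""
    for binding in bindings:
        if binding["user"] != user:
            continue
        conditions = binding["conditions"]
        if any(attributes.get(k) != v for k, v in conditions.items()):
            continue
        if (service, method) in roles[binding["role"]]:
            return True
    return False
```

Here `linda` can call `addBook` only when the request carries `region` equal to `UK`. The role supplies the RBAC part and the condition supplies the ABAC part.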

When leveraging Istio “micro-segmentation” at L7, one may wonder “do I need L4 micro-segmentation?” The correct answer is “absolutely yes”. Not having both layers of protection is like leaving your house open when your front gate is locked. The network is a hostile environment. Just as we did not drop the perimeter firewall when we added East-West DFW with micro-segmentation, it doesn’t make sense to drop L4 micro-segmentation when adding L7 controls and enforcement.

As security attacks become much more sophisticated, a multi-layered security approach is important as each layer focuses specifically on an area where attacks could happen. Here’s a more in-depth analysis of the layered approach with Istio.

![]()

To summarize, let’s go back to the original questions we asked:

- Where is the overlap between Istio and NSX Data Center?

As you can see, the amount of overlap between the solutions is minuscule, and even where it exists (edge LB) we still need both. As a matter of fact, when it comes to security and network intelligence, more is better.

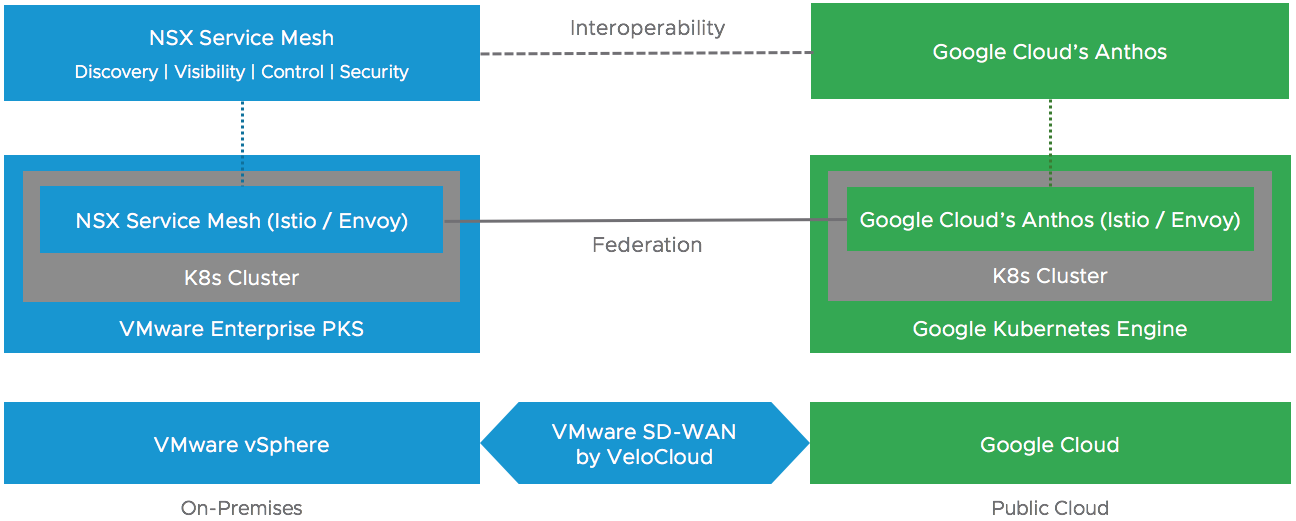

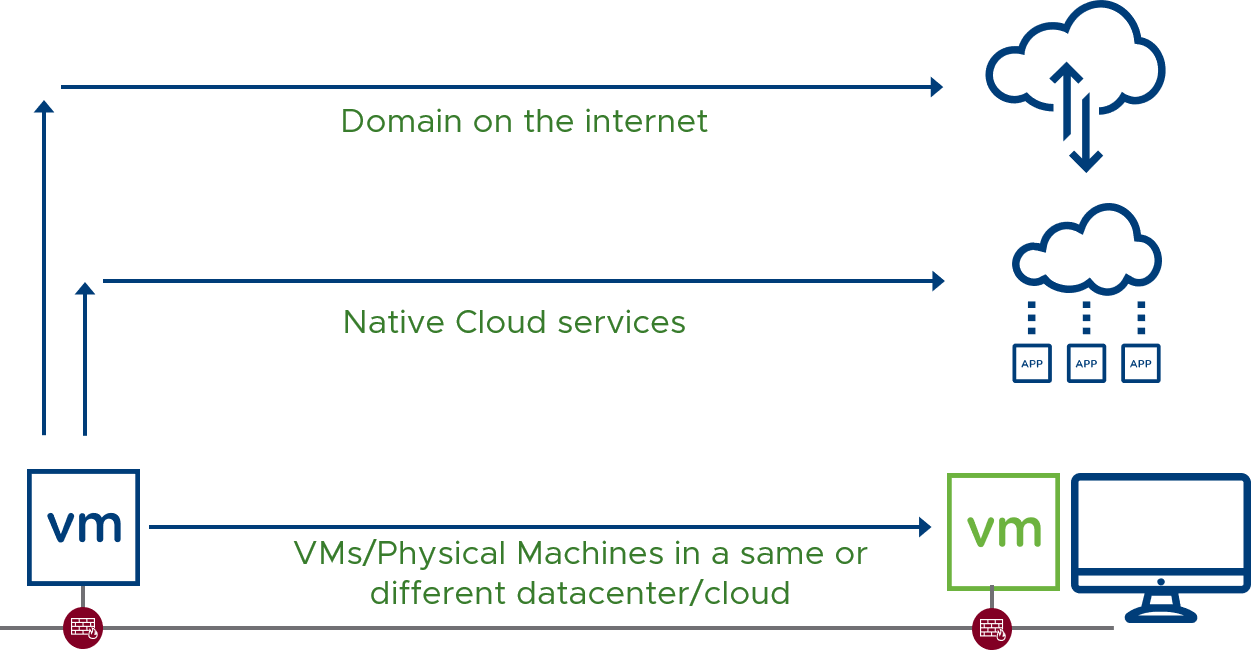

We’ve seen the Istio solution and NSX Data Center on the OSI model. Let’s now look at VMware’s NSX Service Mesh solution with NSX Data Center on a logical diagram:

![]()

- Is there synergy between the solutions?

You will see the discussed synergies being realized soon. We are working on some important integration services around NSX Service Mesh and NSX-T Data Center, bringing the two solutions closer together.

To see where each one fits check out this physical diagram:

![]()

Beyond what we have discussed in this post, you can see that NSX Data Center also covers a wider range of workloads, whereas NSX Service Mesh is focused on cloud native workloads (for now).

Lastly, can service mesh be considered networking at all?

In my view, since Istio lives in L7 where network and application boundaries start to blur, it can be considered networking and at the same time be an application platform.

What do you think?

The post How Istio, NSX Service Mesh and NSX Data Center Fit Together appeared first on Network Virtualization.
